by Achim Ledermüller | May 19, 2021 | Kubernetes, Tutorials

You want automated Fedora CoreOS updates for your Kubernetes cluster? And what do Zincati and libostree have to do with it? Here is a quick overview!

Fedora CoreOS is used as the operating system for many Kubernetes clusters. This container-focused operating system stands out for its simple, automatic updates. Unlike conventional distributions, it is not updated package by package. Instead, Fedora CoreOS first creates a new, updated image of the system and finalizes the update with a reboot. rpm-ostree, in combination with Cincinnati and Zincati, ensures a smooth process.

Before we take a closer look at the components, let’s first clarify how you can enable automatic updates for your NWS Kubernetes cluster.

How do you activate automatic updates for your NWS Kubernetes Cluster?

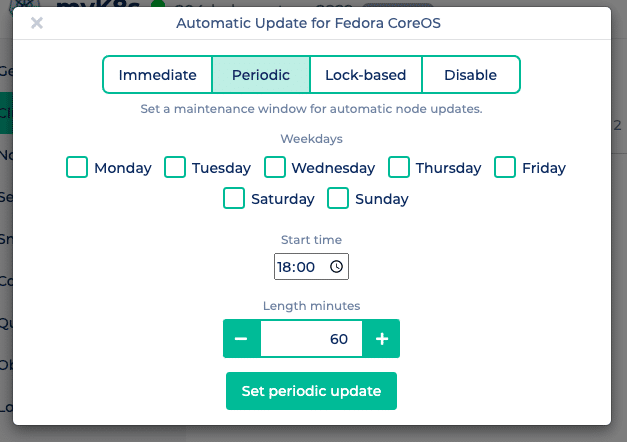

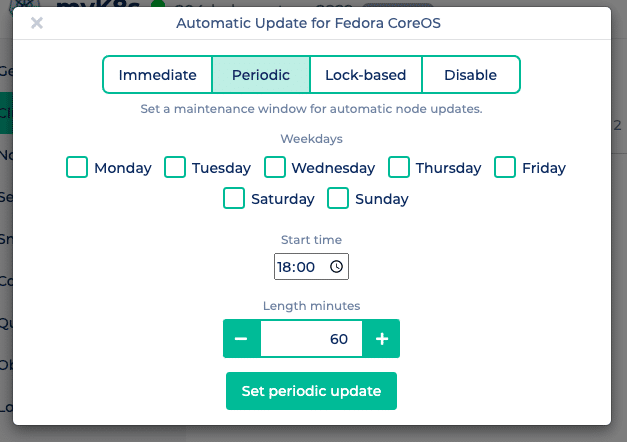

In the NWS portal you can easily choose between different update mechanisms. Click on “Update Fedora CoreOS” in the context menu of your Kubernetes cluster and choose between immediate, periodic and lock-based.

Immediate applies updates immediately to all of your Kubernetes nodes and finalizes the update with a reboot.

Periodic updates your nodes only during a freely selectable maintenance window. In addition to the days of the week, you can also specify the start time and the length of the maintenance window.

Lock-based uses the FleetLock protocol to coordinate the updates. A lock manager coordinates the finalization of updates, which ensures that nodes do not finalize their updates and reboot at the same time. In addition, the update process stops if problems occur, so the remaining nodes do not perform an update.

Disable deactivates automatic updates.
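To make the periodic strategy a bit more tangible, here is a minimal Python sketch of how a maintenance window made up of weekdays, a start time and a length could be evaluated. The function `in_maintenance_window` and its parameters are assumptions made for this illustration; this is neither Zincati's implementation nor its configuration format.

```python
from datetime import datetime, time, timedelta

def in_maintenance_window(now, days, start, length_minutes):
    """Return True if `now` falls into a weekly maintenance window.

    days: set of weekday numbers (Monday=0 ... Sunday=6) on which a window opens
    start: datetime.time at which the window opens
    length_minutes: how long the window stays open (assumed to be under 24 hours)
    """
    # Check the window that opens today and the one that opened yesterday,
    # because a window may run past midnight.
    for offset in (0, 1):
        day = (now - timedelta(days=offset)).date()
        if day.weekday() not in days:
            continue
        opens = datetime.combine(day, start)
        closes = opens + timedelta(minutes=length_minutes)
        if opens <= now < closes:
            return True
    return False

# Hypothetical window: Saturday and Sunday, starting at 23:30, 60 minutes long.
weekend = {5, 6}
print(in_maintenance_window(datetime(2021, 5, 22, 23, 45), weekend, time(23, 30), 60))
print(in_maintenance_window(datetime(2021, 5, 24, 0, 15), weekend, time(23, 30), 60))
print(in_maintenance_window(datetime(2021, 5, 19, 23, 45), weekend, time(23, 30), 60))
```

The second call shows why yesterday's window has to be checked as well: Monday 00:15 still belongs to the window that opened on Sunday evening.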

So far, so good! But what are rpm-ostree and Zincati?

Updates, but different!

The introduction of container-based applications has also made it possible to standardize and simplify the underlying operating systems. Reliable, automatic updates and the control of these – by the operator of the application – additionally reduce the effort for maintenance and coordination.

rpm-ostree creates the images

rpm-ostree is a hybrid of libostree and libdnf and therefore a mixture of image and package system. libostree describes itself as a git for operating system binaries, with each commit containing a bootable file tree. A new release of Fedora CoreOS therefore corresponds to an rpm-ostree commit, maintained and provided by the CoreOS team. libdnf provides the familiar package management features, making the base provided by libostree extensible by users.

Taints and Tolerations

Nodes on which containers cannot be started or are unreachable are given a so-called taint by Kubernetes (e.g. not-ready or unreachable). As a counterpart, pods on such nodes are given a toleration. This also happens during a Fedora CoreOS update. Pods are automatically marked with tolerationSeconds=300 on reboot, which will restart your pods on other nodes after 5 minutes. Of course, you can find more about taints and tolerations in the Kubernetes documentation.

Cincinnati and Zincati distribute the updates

To distribute the rpm-ostree commits, Cincinnati and Zincati are used. The latter is a client that regularly asks the Fedora CoreOS Cincinnati server for updates. As soon as a suitable update is available, rpm-ostree prepares a new, bootable file tree. Depending on the chosen strategy, Zincati finalizes the update by rebooting the node.
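For the lock-based strategy, the finalization step can be pictured as a small semaphore. The following Python sketch is a toy, in-memory lock manager: the class `LockManager`, its method names and the helper `request_body` are hypothetical, and the HTTP layer is left out completely. Only the shape of the JSON body (`client_params` with `id` and `group`) follows the FleetLock protocol.

```python
import json

class LockManager:
    """Toy in-memory lock manager with a fixed number of reboot slots per group."""

    def __init__(self, slots=1):
        self.slots = slots
        self.holders = {}  # group name -> set of node ids holding a reboot lock

    def pre_reboot(self, body):
        """A node asks for permission to reboot; True means the lock was granted."""
        params = json.loads(body)["client_params"]
        holders = self.holders.setdefault(params["group"], set())
        if params["id"] in holders:
            return True  # repeated requests from a lock holder stay granted
        if len(holders) >= self.slots:
            return False  # all slots are taken, the node has to retry later
        holders.add(params["id"])
        return True

    def steady_state(self, body):
        """A node reports that it is healthy again and releases its lock."""
        params = json.loads(body)["client_params"]
        self.holders.get(params["group"], set()).discard(params["id"])
        return True

def request_body(node_id, group="default"):
    # JSON body that a FleetLock client sends with each request.
    return json.dumps({"client_params": {"id": node_id, "group": group}})

manager = LockManager(slots=1)
print(manager.pre_reboot(request_body("node-a")))  # node-a may reboot
print(manager.pre_reboot(request_body("node-b")))  # node-b has to wait
manager.steady_state(request_body("node-a"))       # node-a is healthy again
print(manager.pre_reboot(request_body("node-b")))  # now node-b may reboot
```

If node-a never reports a steady state after its reboot, it keeps the lock and node-b is never allowed to finalize its update. That is the behaviour that stops a broken update from spreading.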

What are the advantages?

Easy rollback

With libostree it is easy to restore the old state. For this, you just have to boot into the previous rpm-ostree commit, which is also available as an entry in the GRUB bootloader menu.

Low effort

Fedora CoreOS can update itself without manual intervention. In combination with Kubernetes, applications are also automatically moved to the currently available nodes.

Flexible Configuration

Zincati offers a simple and flexible configuration that will hopefully allow any user to find a suitable update strategy.

Better Quality

The streamlined image-based approach makes it easier and more accurate to test each version as a whole.

Only time will tell whether this hybrid of image and package-based operating system will prevail. Fedora CoreOS, as the basis for our NWS Managed Kubernetes, significantly simplifies the update process while still providing our customers with straightforward control.

by Achim Ledermüller | Oct 14, 2020 | Kubernetes, Tutorials

You want to know how to get the IP addresses of your clients in your Kubernetes cluster? In five minutes you have an overview!

From HTTP client to application

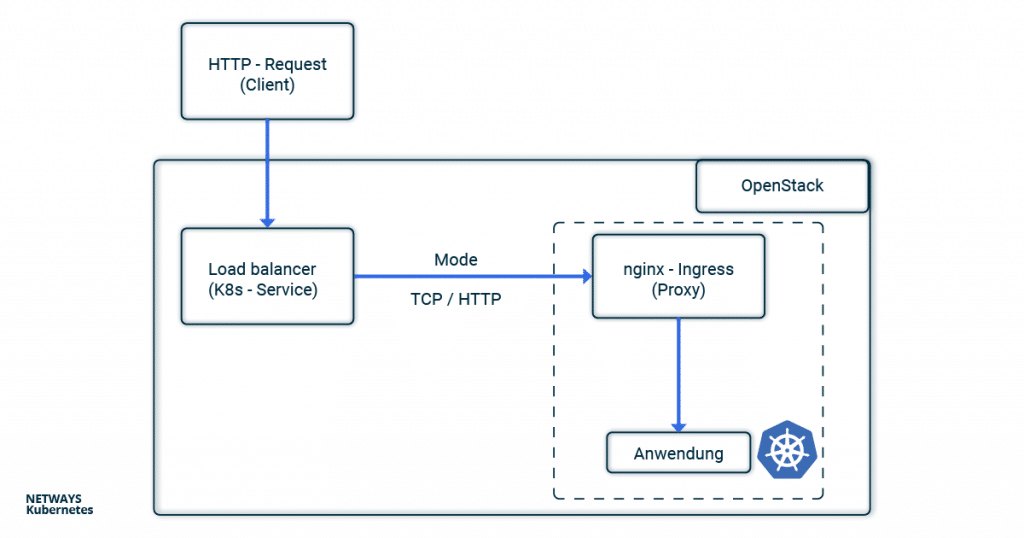

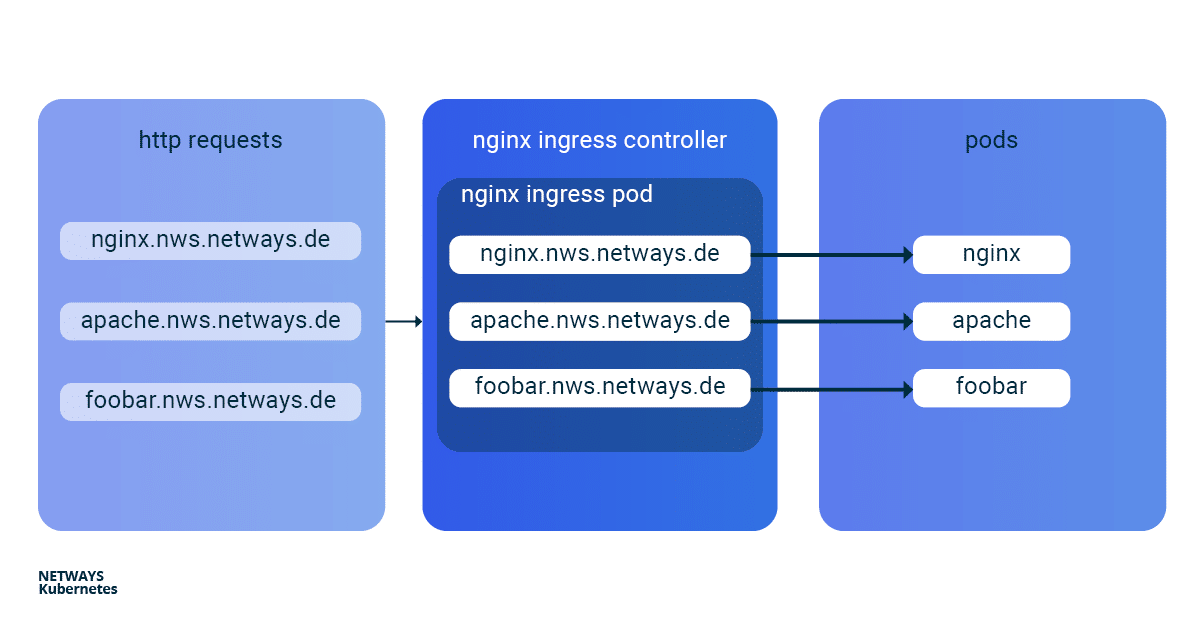

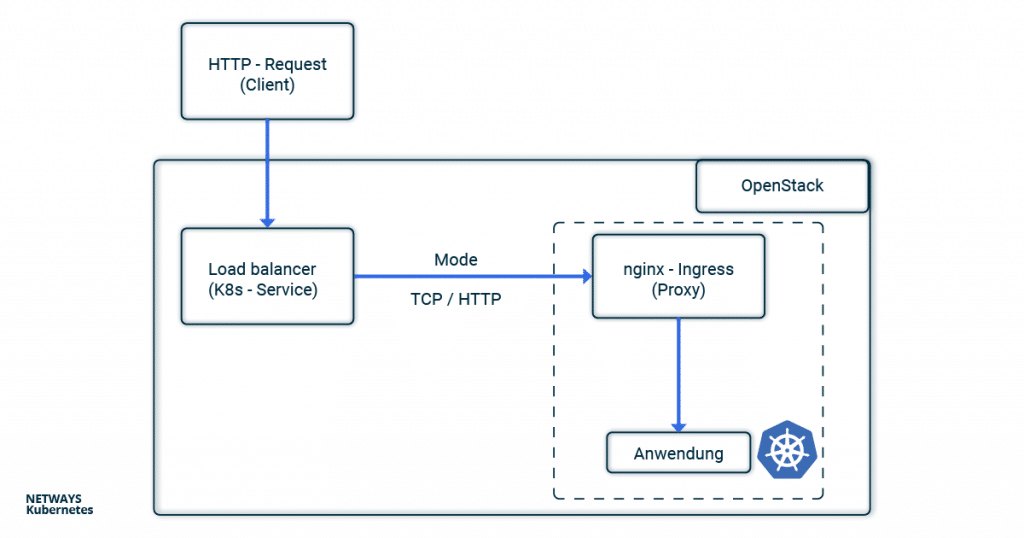

In the nginx-Ingress-Controller tutorial, we showed how to make an application publicly accessible. In the case of the NETWAYS Cloud, your Kubernetes cluster uses an Openstack load balancer, which forwards the client requests to an nginx ingress controller in the Kubernetes cluster. This then distributes all requests to the corresponding pods.

With all this forwarding of requests, the connection details of the clients get lost unless you configure something extra. Since this problem is older than Kubernetes, the tried and tested solutions X-Forwarded-For and the proxy protocol are used.

In order not to lose track in the buzzword bingo between service, load balancer, ingress, proxy, client and application, you can look at the path of an HTTP request from the client to the application through the components of a Kubernetes cluster in this example.

Client IP Addresses with X-Forwarded-For

If you use HTTP, the client IP address can be stored in the X-Forwarded-For (XFF) header and passed along. XFF is an HTTP header field and is supported by most proxy servers. In this example, the load balancer places the client IP address in the XFF header and forwards the request. All other proxy servers and the applications can therefore see in the XFF header from which address the request was originally sent.
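What the proxies along the way do with the header can be sketched in a few lines of Python. The helper names `append_forwarded_for` and `original_client` are made up for this illustration, the IP addresses are placeholders, and header names are treated as case-sensitive dictionary keys to keep things short.

```python
def append_forwarded_for(headers, peer_ip):
    """Return a copy of `headers` with `peer_ip` appended to X-Forwarded-For.

    This is what a proxy does before passing a request on: it records the
    address of the peer it received the request from.
    """
    updated = dict(headers)
    existing = updated.get("X-Forwarded-For")
    updated["X-Forwarded-For"] = f"{existing}, {peer_ip}" if existing else peer_ip
    return updated

def original_client(headers):
    """Return the left-most X-Forwarded-For entry, or None if the header is missing."""
    value = headers.get("X-Forwarded-For", "")
    first = value.split(",")[0].strip()
    return first or None

# The load balancer sees the client, nginx sees the load balancer.
at_load_balancer = append_forwarded_for({}, "203.0.113.7")
at_nginx = append_forwarded_for(at_load_balancer, "10.0.0.5")
print(at_nginx["X-Forwarded-For"])
print(original_client(at_nginx))
```

Keep in mind that a client can send its own X-Forwarded-For header, so the left-most entry is only trustworthy if the first proxy in the chain is under your control.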

In Kubernetes, the load balancer is configured via annotations in the service object. If you set loadbalancer.openstack.org/x-forwarded-for: "true" there, the load balancer is configured accordingly. Of course, it is also important that the next proxy does not overwrite the X-Forwarded-For header again. In the case of nginx, you can set the option use-forwarded-headers in its ConfigMap.

---
# Service
kind: Service
apiVersion: v1
metadata:
  name: loadbalanced-service
  annotations:
    loadbalancer.openstack.org/x-forwarded-for: "true"
spec:
  selector:
    app: echoserver
  type: LoadBalancer
  ports:
  - port: 80
    targetPort: 8080
    protocol: TCP
---
# ConfigMap
apiVersion: v1
kind: ConfigMap
metadata:
  name: nginx
data:
  use-forwarded-headers: "true"

Since the HTTP header is used, it is not possible to enrich HTTPS connections with the client IP address. Here, one must either terminate the TLS/SSL protocol at the load balancer or fall back on the proxy protocol.

Client Information with Proxy Protocol

If you use X-Forwarded-For, you are obviously limited to HTTP. To give HTTPS and other applications behind load balancers and proxies access to the connection information of the clients, the so-called proxy protocol was invented. Technically, the load balancer prepends a small header with the client’s connection information. The next hop (here nginx) must of course also understand the protocol and handle it accordingly. Besides classic proxies, other applications such as MariaDB or Postfix also support the proxy protocol.
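The "small header" is easiest to understand in its human-readable version 1 form: a single text line in front of the actual payload. The following Python sketch builds and splits such a line for an IPv4 TCP connection. The function names and addresses are chosen for this example only, and the parser is deliberately minimal (it does not handle `PROXY UNKNOWN` or the binary version 2).

```python
def build_proxy_v1(src_ip, dst_ip, src_port, dst_port):
    """Build a PROXY protocol version 1 header line for an IPv4 TCP connection."""
    return f"PROXY TCP4 {src_ip} {dst_ip} {src_port} {dst_port}\r\n".encode("ascii")

def split_proxy_v1(data):
    """Split received bytes into (client connection info, remaining payload)."""
    line, separator, payload = data.partition(b"\r\n")
    fields = line.decode("ascii").split(" ")
    if not separator or len(fields) != 6 or fields[0] != "PROXY":
        raise ValueError("no PROXY protocol v1 header found")
    _, family, src_ip, dst_ip, src_port, dst_port = fields
    info = {
        "family": family,
        "client": (src_ip, int(src_port)),
        "destination": (dst_ip, int(dst_port)),
    }
    return info, payload

# The load balancer prepends the header; the payload itself stays untouched,
# so it may just as well be an encrypted TLS stream.
wire = build_proxy_v1("203.0.113.7", "198.51.100.20", 56324, 443) + b"\x16\x03\x01..."
info, payload = split_proxy_v1(wire)
print(info["client"])
print(payload)
```

Because the header sits in front of the payload instead of inside it, the receiving side must expect it. A server that does not speak the proxy protocol would treat the line as garbage, which is why both ends have to be configured together.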

To activate the proxy protocol, you must add the annotation loadbalancer.openstack.org/proxy-protocol to the service object. The protocol must also be activated for the accepting proxy.

---
# Service Loadbalancer
kind: Service
apiVersion: v1
metadata:
  name: loadbalanced-service
  annotations:
    loadbalancer.openstack.org/proxy-protocol: "true"
spec:
  selector:
    app: echoserver
  type: LoadBalancer
  ports:
  - port: 80
    targetPort: 8080
    protocol: TCP
---
# NGINX ConfigMap
apiVersion: v1
kind: ConfigMap
metadata:
  name: nginx
data:
  use-proxy-protocol: "true"

In most cases, however, you will fall back on the Helm chart of the nginx ingress controller. There, a corresponding configuration is even easier.

nginx-Ingress-Controller and Helm

If you use Helm to install the nginx-ingress-controller, the configuration is very clear. The proxy protocol is activated for both the nginx and the load balancer via the Helm values file:

nginx-ingress.values:

---
controller:
  config:
    use-proxy-protocol: "true"
  service:
    annotations:
      # Annotation values must be strings, hence the quotes.
      loadbalancer.openstack.org/proxy-protocol: "true"
    type: LoadBalancer

$ helm install my-ingress stable/nginx-ingress -f nginx-ingress.values

The easiest way to test whether everything works as expected is to use the Google Echoserver. This is a small application that simply returns the HTTP request to the client. As described in the nginx-Ingress-Controller tutorial, we need a deployment with service and ingress. The former starts the echo server, the service makes it accessible in the cluster and the ingress configures the nginx so that the requests are forwarded to the deployment.

---
# Deployment
apiVersion: apps/v1
kind: Deployment
metadata:
  name: echoserver
spec:
  selector:
    matchLabels:
      app: echoserver
  replicas: 1
  template:
    metadata:
      labels:
        app: echoserver
    spec:
      containers:
      - name: echoserver
        image: gcr.io/google-containers/echoserver:1.8
        ports:
        - containerPort: 8080
---
# Service
apiVersion: v1
kind: Service
metadata:
  name: echoserver-svc
spec:
  ports:
  - port: 80
    targetPort: 8080
    protocol: TCP
    name: http
  selector:
    app: echoserver
---
# Ingress
apiVersion: networking.k8s.io/v1beta1
kind: Ingress
metadata:
  name: echoserver-ingress
spec:
  rules:
  - host: echoserver.nws.netways.de
    http:
      paths:
      - backend:
          serviceName: echoserver-svc
          servicePort: 80

For testing purposes, it is best to add an entry to your /etc/hosts so that echoserver.nws.netways.de points to the public IP address of your nginx ingress controller. curl echoserver.nws.netways.de will then show you everything that the echo server knows about your client, including the IP address in the X-Forwarded-For header.

Conclusion

In the Kubernetes cluster, the proxy protocol is probably the better choice for most use cases. The well-known Ingress controllers support the proxy protocol and TLS/SSL connections can be configured and terminated in the K8s cluster. The quickest way to find out what information arrives at your application is to use Google’s echo server.

by Achim Ledermüller | Aug 19, 2020 | Kubernetes, Tutorials

You already know the most important building blocks for starting your application from our tutorial series. Are you still missing metrics and logs for your applications? After this blog post, you can tick off the latter.

Logging with Loki and Grafana in Kubernetes – an Overview

One of the best-known, heavyweight solutions for collecting and managing your logs is also available for Kubernetes. This usually consists of Logstash or Fluentd for collecting, paired with Elasticsearch for storing and Kibana or Graylog for visualising your logs.

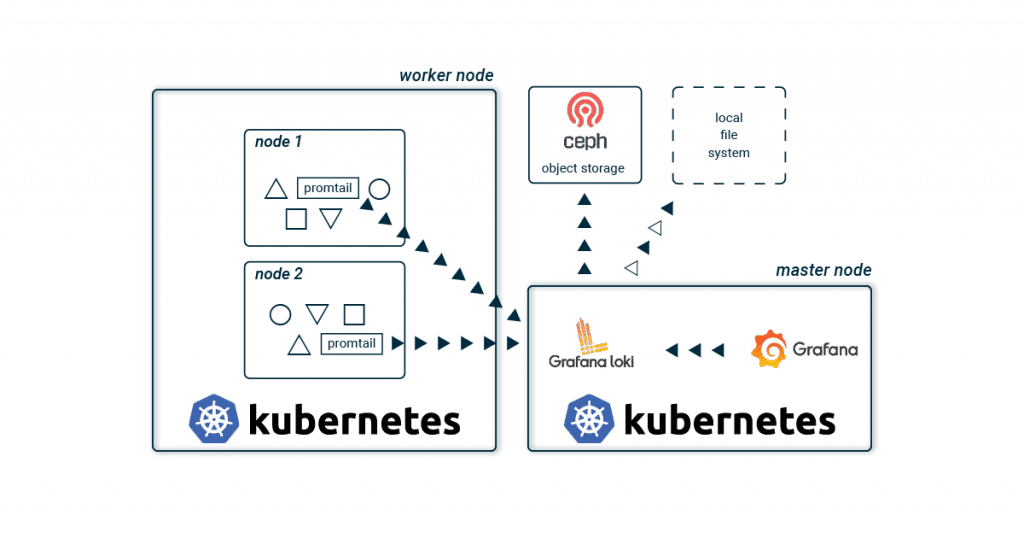

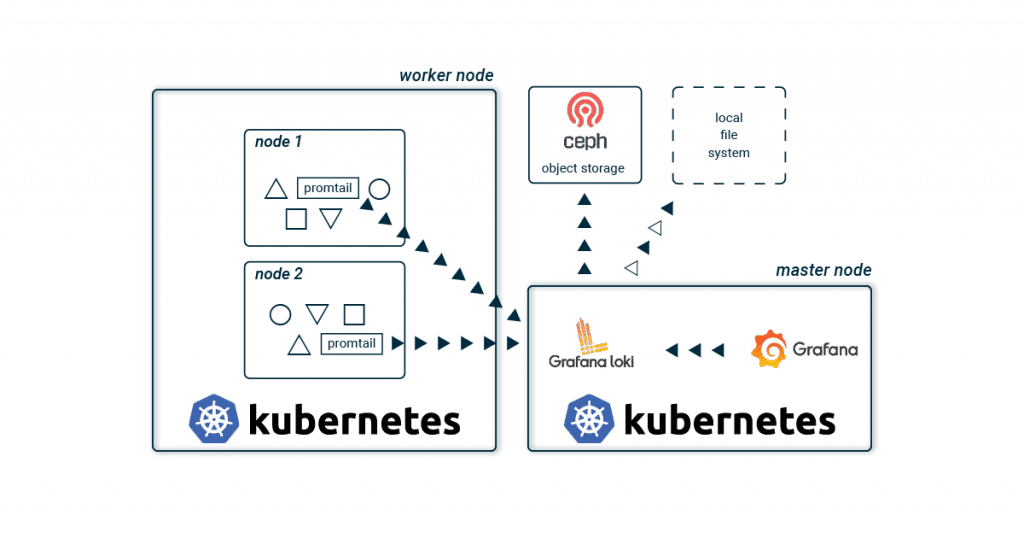

In addition to this classic combination, a new, more lightweight stack has been available for a few years now with Loki and Grafana! The basic architecture hardly differs from the familiar setups.

Promtail collects the logs of all containers on each Kubernetes node and sends them to a central Loki instance. This aggregates all logs and writes them to a storage back-end. Grafana is used for visualisation, which fetches the logs directly from the Loki instance.

The biggest difference to the known stacks is probably the lack of Elasticsearch. This saves resources and effort, because no triple-replicated full-text index has to be stored and administered. And especially when you start to build up your application, a lean and simple stack sounds appealing. As the application landscape grows, individual Loki components can be scaled up to spread the load across multiple servers.

No full text index? How does it work?

Of course, Loki does not do without an index for quick searches, but only metadata (similar to Prometheus) is indexed. This greatly reduces the effort required to run the index. For your Kubernetes cluster, Labels are therefore mainly stored in the index and your logs are automatically organised using the same metadata as your applications in your Kubernetes cluster. Using a time window and the Labels, Loki quickly and easily finds the logs you are looking for.

To store the index, you can choose from various databases. Besides the two cloud databases BigTable and DynamoDB, Loki can also store its index locally in Cassandra or BoltDB. The latter does not support replication and is mainly suitable for development environments. Loki offers another database, boltdb-shipper, which is currently still under development. This is primarily intended to remove dependencies on a replicated database and regularly store snapshots of the index in chunk storage (see below).

A quick example

A pod produces two log streams with stdout and stderr. These log streams are split into so-called chunks and compressed as soon as a certain size has been reached or a time window has expired.

A chunk therefore contains compressed logs of a stream and is limited to a maximum size and time unit. These compressed data records are then stored in the chunk storage.

Label vs. Stream

A combination of exactly the same labels (including their values) defines a stream. If you change a label or its value, a new stream is created. For example, the logs from stdout of an nginx pod are in a stream with the labels: pod-template-hash=bcf574bc8, app=nginx and stream=stdout.

In Loki’s index, these chunks are linked with the stream’s labels and a time window. A search in the index must therefore only be filtered by labels and time windows. If one of these links matches the search criteria, the chunk is loaded from the storage and the logs it contains are filtered according to the search query.
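The interplay of streams, chunks and index can be modelled in a few lines of Python. This is only a toy model under simplifying assumptions: the class `TinyLogStore` is invented for this post, timestamps are plain integers and gzip stands in for whatever compression is actually configured. It is not how Loki is implemented, but it shows why the index stays small.

```python
import gzip

class TinyLogStore:
    """Toy model of a label index that points to compressed chunks."""

    def __init__(self):
        self.index = []   # entries: (labels, start, end, chunk_id)
        self.chunks = {}  # chunk_id -> compressed log lines

    def add_chunk(self, labels, start, end, lines):
        chunk_id = len(self.chunks)
        self.chunks[chunk_id] = gzip.compress("\n".join(lines).encode())
        # Only labels and the time window are indexed, never the log text.
        self.index.append((frozenset(labels.items()), start, end, chunk_id))

    def query(self, selector, start, end, needle=""):
        wanted = set(selector.items())
        hits = []
        for labels, chunk_start, chunk_end, chunk_id in self.index:
            # Step 1: filter by labels and overlapping time window (index only).
            if not wanted <= labels or chunk_end < start or chunk_start > end:
                continue
            # Step 2: load the chunk, decompress it and scan its lines.
            lines = gzip.decompress(self.chunks[chunk_id]).decode().split("\n")
            hits.extend(line for line in lines if needle in line)
        return hits

store = TinyLogStore()
nginx_stdout = {"app": "nginx", "stream": "stdout"}
nginx_stderr = {"app": "nginx", "stream": "stderr"}  # other value, other stream
store.add_chunk(nginx_stdout, 0, 59, ["GET / 200 192.168.0.1", "GET /a 404 10.0.0.9"])
store.add_chunk(nginx_stdout, 60, 119, ["GET /b 200 192.168.0.1"])
store.add_chunk(nginx_stderr, 0, 59, ["upstream timed out"])
print(store.query({"app": "nginx", "stream": "stdout"}, 0, 59, needle="192.168.0.1"))
```

Of the three chunks, only one is decompressed for this query. The other two are ruled out by the index alone, without ever looking at their content.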

Chunk Storage

The compressed and fragmented log streams are stored in the chunk storage. As with the index, you can also choose between different storage back-ends. Due to the size of the chunks, an object store such as GCS, S3, Swift or our Ceph object store is recommended. Replication is automatically included and the chunks are automatically removed from the storage based on an expiry date. In smaller projects or development environments, you can of course also start with a local file system.

Visualisation with Grafana

Grafana is used for visualisation. Preconfigured dashboards can be easily imported. LogQL is used as the query language. This proprietary creation of Grafana Labs leans heavily on PromQL from Prometheus and is just as quick to learn. A query consists of two parts:

First, you filter for the corresponding chunks using labels and the Log Stream Selector. With = you always make an exact comparison, and =~ allows the use of a regex. As usual, the selection is negated with ! (that is, != and !~).

After you have limited your search to certain chunks, you can expand it with a search expression. Here, too, you can use various operators such as |= and |~ to further restrict the result. A few examples are probably the quickest way to show the possibilities:

Log Stream Selector:

{app = "nginx"}

{app != "nginx"}

{app =~ "ngin.*"}

{app !~ "nginx$"}

{app = "nginx", stream != "stdout"}

Search Expression:

{app = "nginx"} |= "192.168.0.1"

{app = "nginx"} != "192.168.0.1"

{app = "nginx"} |~ "192.*"

{app = "nginx"} !~ "192$"
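If you want a feeling for what the four search operators do with the lines of a chunk, you can mimic them in Python. This is a rough sketch: the dictionary `FILTERS` and the function `apply_filter` are made up for illustration, the log lines are invented, and Python's `re` module is not identical to the regex dialect Loki uses. The sketch assumes that a regex may match anywhere in the line.

```python
import re

# The four line filter operators, expressed as plain Python predicates.
FILTERS = {
    "|=": lambda line, arg: arg in line,                       # contains the string
    "!=": lambda line, arg: arg not in line,                   # does not contain it
    "|~": lambda line, arg: re.search(arg, line) is not None,  # matches the regex
    "!~": lambda line, arg: re.search(arg, line) is None,      # does not match it
}

def apply_filter(lines, operator, argument):
    """Keep only the lines that pass a single line filter."""
    keep = FILTERS[operator]
    return [line for line in lines if keep(line, argument)]

logs = [
    '192.168.0.1 "GET / HTTP/1.1" 200',
    '10.0.0.9 "GET /health HTTP/1.1" 200',
    '192.168.0.22 "GET /missing HTTP/1.1" 404',
]
print(apply_filter(logs, "|=", "192.168.0.1"))
print(apply_filter(logs, "!=", "192.168.0.1"))
print(apply_filter(logs, "|~", "192.*"))
print(apply_filter(logs, "!~", "404$"))
```

Note that `|= "192.168.0.1"` is a plain substring test, so the line from 192.168.0.22 is not matched, while the regex `192.*` catches both addresses.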

Further possibilities such as aggregations are explained in detail in the official documentation of LogQL.

After this short introduction to the architecture and functionality of Grafana Loki, we will of course start right away with the installation. A lot more information and possibilities for Grafana Loki are of course available in the official documentation.

Get it running!

You would like to just try out Loki?

With the NWS Managed Kubernetes Cluster you can do without the details! With just one click you can start your Loki Stack and always have your Kubernetes Cluster in full view!

As usual with Kubernetes, a running example is deployed faster than reading the explanation. Using Helm and a few variables, your lightweight logging stack is quickly installed. First, we initialise two Helm repositories. Besides Grafana, we also add the official Helm stable charts repository. After two short helm repo add commands we have access to the required Loki and Grafana charts.

Install Helm

brew install helm

apt install helm

choco install kubernetes-helm

You don’t have the right sources? On helm.sh you will find a brief guide for your operating system.

helm repo add loki https://grafana.github.io/loki/charts

helm repo add stable https://kubernetes-charts.storage.googleapis.com/

Install Loki and Grafana

For your first Loki stack you do not need any further configuration. The default values fit very well and helm install does the rest. Before installing Grafana, we first set its configuration using the well-known helm values files. Save them with the name grafana.values.

In addition to the administrator password, the Loki instance that has just been installed is also set as the data source. For visualisation, we import a dashboard and the required plugins. This way, you install a Grafana that is already configured for Loki and can get started directly after the deployment.

grafana.values:

---
adminPassword: supersecret

datasources:
  datasources.yaml:
    apiVersion: 1
    datasources:
    - name: Loki
      type: loki
      url: http://loki-headless:3100
      jsonData:
        maxLines: 1000

plugins:
  - grafana-piechart-panel

dashboardProviders:
  dashboardproviders.yaml:
    apiVersion: 1
    providers:
      - name: default
        orgId: 1
        folder:
        type: file
        disableDeletion: true
        editable: false
        options:
          path: /var/lib/grafana/dashboards/default

dashboards:
  default:
    Logging:
      gnetId: 12611
      revision: 1
      datasource: Loki

The actual installation is done with the help of helm install. The first parameter is a freely selectable name. With its help, you can also quickly get an overview:

helm install loki loki/loki-stack

helm install loki-grafana stable/grafana -f grafana.values

kubectl get all -n kube-system -l release=loki

After deployment, you can log in as admin with the password supersecret. To be able to access the Grafana web interface directly, you still need a port-forward:

kubectl --namespace kube-system port-forward service/loki-grafana 3001:80

The logs of your running pods should be immediately visible in Grafana. Try the queries under Explore and discover the dashboard!
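The queries you try out under Explore can also be sent to Loki's HTTP API, which offers a range query endpoint at `/loki/api/v1/query_range`. The following Python sketch only builds such a URL and does not send it. The helper `loki_query_url` is hypothetical, and the base URL `http://localhost:3100` assumes an additional port-forward to the Loki service that is not part of the steps above.

```python
from urllib.parse import urlencode

def loki_query_url(base_url, logql, start_ns, end_ns, limit=100):
    """Build the URL for a range query against Loki's HTTP API."""
    params = urlencode({
        "query": logql,
        "start": start_ns,  # Unix epoch in nanoseconds
        "end": end_ns,
        "limit": limit,
    })
    return f"{base_url}/loki/api/v1/query_range?{params}"

# First minute of the epoch, purely as placeholder timestamps.
url = loki_query_url("http://localhost:3100", '{app="nginx"} |= "192.168.0.1"', 0, 60 * 10**9)
print(url)
```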

Logging with Loki and Grafana in Kubernetes – the Conclusion

With Loki, Grafana Labs offers a new approach to central log management. The use of low-cost and easily available object stores makes the time-consuming administration of an Elasticsearch cluster superfluous. The simple and fast deployment is also ideal for development environments. While the two alternatives Kibana and Graylog offer a powerful feature set, for some administrators Loki with its streamlined and simple stack may be more enticing.

by Achim Ledermüller | Jun 24, 2020 | Kubernetes, Tutorials

You want to create a persistent volume in Kubernetes? Here you can learn how it works with Openstack Cinder in an NWS Managed Kubernetes plan.

Pods and containers are by definition more or less ephemeral components in a Kubernetes cluster and are created and destroyed as needed. However, many applications such as databases can rarely be operated meaningfully without long-lived storage. With the industry-standard Container Storage Interface (CSI) spec, Kubernetes offers an abstraction for different storage backends for the integration of persistent volumes.

In the case of our Managed Kubernetes solution, we use the Openstack component Cinder to provide persistent volumes for pods. The CSI Cinder controller is already active in NWS Kubernetes clusters starting with version 1.18.2. You can start using persistent volumes with only a few K8s objects.

Creating Persistent Volumes with CSI Cinder Controller

Before you can create a volume, a StorageClass must be created with Cinder as the provisioner. As usual, the K8s objects are applied to your cluster in the YAML format and by using kubectl apply:

storageclass.yaml:

---
apiVersion: storage.k8s.io/v1
kind: StorageClass
metadata:
  name: cinderstorage
provisioner: cinder.csi.openstack.org
allowVolumeExpansion: true

With get and describe you can check whether the creation was successful:

kubectl apply -f storageclass.yaml

kubectl get storageclass

kubectl describe storageclass cinderstorage

With the help of this storage class, you can create as many volumes as your quota allows.

Persistent Volume (PV) and Persistent Volume Claim (PVC)

You can create a new volume with the help of a PersistentVolumeClaim. The PVC claims a PersistentVolume resource for you. If no sufficiently sized PV is available, it is dynamically created by the Cinder CSI controller. PVC and PV are bound to each other and are exclusively available to you. By default, the attached PV follows the life cycle of the PVC, so deleting a PVC also permanently removes its associated PV. This behaviour can be overridden in the StorageClass defined above with the help of the reclaimPolicy.

pvc.yaml:

---
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: nginx-documentroot
spec:
  accessModes:
  - ReadWriteOnce
  resources:
    requests:
      storage: 1Gi
  storageClassName: cinderstorage

In addition to the name, other properties such as size and accessMode are defined in the PVC object. After you’ve created the PVC with kubectl apply, a new volume is automatically created in the appropriate storage backend managed by Cinder. In the case of our NETWAYS Managed Kubernetes, Cinder creates an RBD volume in the Ceph cluster. In the next step, we’ll mount the new volume in the document root of an Nginx pod to make the website’s data persistent.

To make sure the PVC was created successfully, you can describe the new resource as follows. The status must be Bound and the events must show “ProvisioningSucceeded” for the next step to work.

kubectl describe pvc nginx-documentroot
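How a claim finds its volume can be pictured with a small Python model. It is a strongly simplified sketch and not the logic of the Kubernetes controllers: the functions `to_bytes` and `bind_claim`, the dictionary layout and the generated volume name are all assumptions made for this example.

```python
UNITS = {"Ki": 2**10, "Mi": 2**20, "Gi": 2**30, "Ti": 2**40}

def to_bytes(quantity):
    """Convert a binary quantity such as '1Gi' into bytes."""
    return int(quantity[:-2]) * UNITS[quantity[-2:]]

def bind_claim(claim, volumes):
    """Pick the smallest free volume that satisfies the claim, or provision one.

    `volumes` is a list of dicts; a bound volume carries the name of its claim.
    """
    candidates = [
        v for v in volumes
        if v["claim"] is None
        and v["storageClassName"] == claim["storageClassName"]
        and claim["accessMode"] in v["accessModes"]
        and to_bytes(v["capacity"]) >= to_bytes(claim["storage"])
    ]
    if candidates:
        volume = min(candidates, key=lambda v: to_bytes(v["capacity"]))
    else:
        # No fitting volume exists: provision a new one of the requested size.
        volume = {
            "name": f"pv-for-{claim['name']}",  # hypothetical naming scheme
            "capacity": claim["storage"],
            "accessModes": [claim["accessMode"]],
            "storageClassName": claim["storageClassName"],
            "claim": None,
        }
        volumes.append(volume)
    volume["claim"] = claim["name"]  # PV and PVC are now bound exclusively
    return volume

claim = {"name": "nginx-documentroot", "storage": "1Gi",
         "accessMode": "ReadWriteOnce", "storageClassName": "cinderstorage"}
volumes = []
print(bind_claim(claim, volumes)["name"])
```

Since the list of volumes is empty here, the claim triggers the dynamic path, which corresponds to what the Cinder CSI controller does for you in the cluster.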

Pods and persistent Volumes

Usually, volumes are defined in the context of a pod and therefore have the same life cycle as the pod. However, if you want to use a volume that is independent of the pod and container, you can reference the PVC you just created in the volumes section and then include it in the container under volumeMounts. In this example, the document root of an Nginx is replaced.

deployment.yaml:

---
apiVersion: apps/v1
kind: Deployment
metadata:
  name: nginx
  labels:
    app: nginx
spec:
  selector:
    matchLabels:
      app: nginx
  strategy:
    type: Recreate
  template:
    metadata:
      labels:
        app: nginx
    spec:
      containers:
      - image: nginx
        name: nginx
        ports:
        - containerPort: 80
          protocol: TCP
        volumeMounts:
          - mountPath: /usr/share/nginx/html
            name: documentroot
      volumes:
      - name: documentroot
        persistentVolumeClaim:
          claimName: nginx-documentroot
          readOnly: false

service.yaml:

---
apiVersion: v1
kind: Service
metadata:
  name: nginx-svc
spec:
  ports:
  - port: 80
    targetPort: 80
    protocol: TCP
    name: http
  selector:
    app: nginx

Kubernetes and the CSI Cinder Controller naturally ensure that your new volume and the associated pods are always started on the same worker node. With kubectl you can also quickly adjust the index.html, port-forward the service's port and access your newly created index.html living in the persistent volume:

kubectl exec -it deployment/nginx -- bash -c 'echo "CSI FTW" > /usr/share/nginx/html/index.html'

kubectl port-forward service/nginx-svc 8080:80

Conclusion

With the CSI Cinder Controller, you can create and manage persistent volumes quickly and easily. Further features for creating snapshots or enlarging volumes are already included, and options such as multi-node attachment are already being planned. So nothing stands in the way of your database cluster in Kubernetes, and the next exciting topic in our Kubernetes blog series has been decided!

by Achim Ledermüller | May 20, 2020 | Kubernetes, Tutorials

With the first steps in Kubernetes, you already know how to launch applications in your Kubernetes cluster. Now we will expose your application online. How the whole thing works and how you can best get started yourself with a Kubernetes Nginx Ingress Controller is explained below with an example.

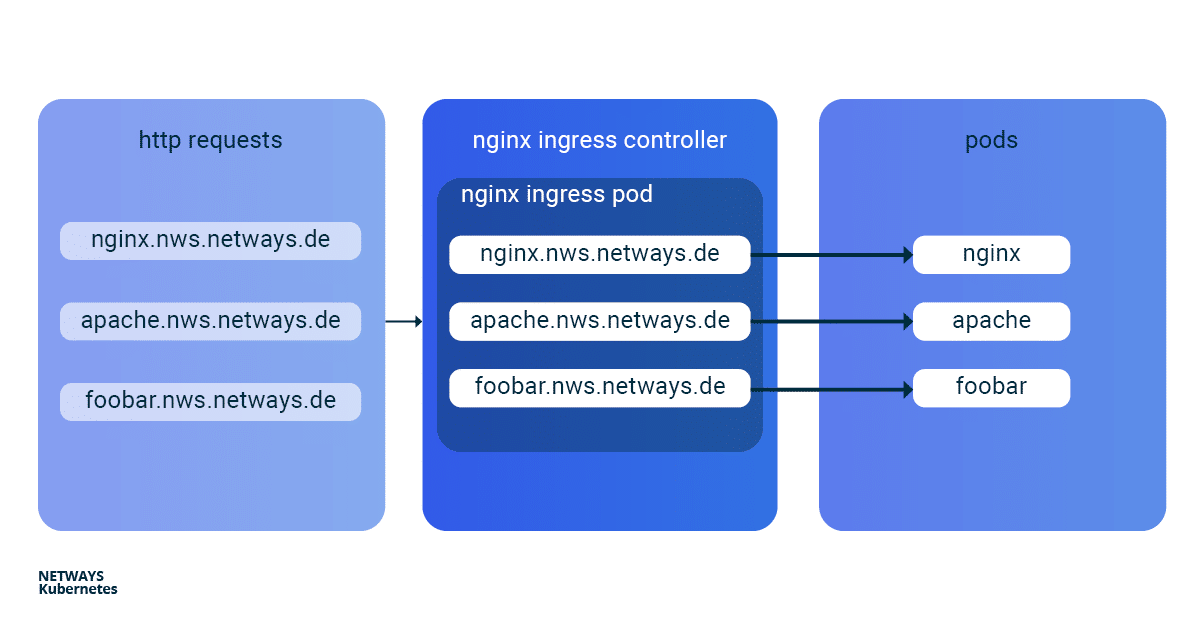

To make applications accessible from the outside in a Kubernetes cluster, you can use a service of type LoadBalancer. In the NETWAYS Cloud, we start an Openstack Octavia LB with a public IP in the background and forward the incoming traffic to the pods (bingo). This way, however, we need a separate load balancer with a public IP for each application. To work more resource- and cost-efficiently in a case like this, name-based virtual hosts and Server Name Indication (SNI) were developed a long time ago. The well-known NGINX web server supports both and, as a Kubernetes ingress controller, it can make all our http/s applications quickly and easily accessible with only one public IP address.

Installing and updating the Nginx Ingress Controller is greatly simplified thanks to a Helm chart. With K8s Ingress objects, you configure the mapping of vHosts, URI paths and TLS certificates to K8s services and consequently to our applications. So that the buzzwords don’t prevent you from seeing the essentials, here is a brief overview of how the HTTP requests are forwarded to our applications:

Installation of Kubernetes Nginx Ingress Controller

For easy installation of the Kubernetes Nginx Ingress Controller, you should use Helm. Helm describes itself as a package manager for Kubernetes applications. Besides installation, Helm also offers easy updates of its applications. As with kubectl, you only need the K8s config to get started:

helm install my-ingress stable/nginx-ingress

With this command Helm starts all necessary components in the default namespace and gives them the label release=my-ingress. A deployment, a replicaset and a pod are created for the Nginx Ingress Controller. All http/s requests must be forwarded to this pod so that it can sort the requests based on vHosts and URI paths. For this purpose a service of the type LoadBalancer was created, which listens on a public IP and forwards the incoming traffic on ports 443 and 80 to our pod. A similar construct is also created for the default-backend, which I will not go into here. So that you don’t lose the overview, you can display all the components involved with kubectl:

kubectl get all -l release=my-ingress #with default-backend

kubectl get all -l release=my-ingress,component=controller #without default-backend

NAME                                                       READY   STATUS    RESTARTS
pod/my-ingress-nginx-ingress-controller-5b649cbcd8-6hgz6   1/1     Running   0

NAME                                                  READY   UP-TO-DATE   AVAILABLE
deployment.apps/my-ingress-nginx-ingress-controller   1/1     1            1

NAME                                                             DESIRED   CURRENT   READY
replicaset.apps/my-ingress-nginx-ingress-controller-5b649cbcd8   1         1         1

NAME                                          TYPE           CLUSTER-IP      EXTERNAL-IP      PORT(S)
service/my-ingress-nginx-ingress-controller   LoadBalancer   10.254.252.54   185.233.188.56   80:32110/TCP,443:31428/TCP

Example Applications: Apache and Nginx

Next, we start two simple example applications. In this example, I use Apache and Nginx. The goal is to make both applications available under their own name-based virtual hosts: nginx.nws.netways.de and apache.nws.netways.de. For the two deployments to be accessible within the K8s cluster, we still need to connect each of them with a service.

K8s Deployments

Nginx Deployment

apiVersion: apps/v1
kind: Deployment
metadata:
  name: nginx-deployment
  labels:
    app: nginx
spec:
  replicas: 3
  selector:
    matchLabels:
      app: nginx
  template:
    metadata:
      labels:
        app: nginx
    spec:
      containers:
      - name: nginx
        image: nginx:1.7.9
        ports:
        - containerPort: 80

Apache Deployment

apiVersion: apps/v1
kind: Deployment
metadata:
  name: apache-deployment
  labels:
    app: apache
spec:
  replicas: 3
  selector:
    matchLabels:
      app: apache
  template:
    metadata:
      labels:
        app: apache
    spec:
      containers:
      - name: apache
        image: httpd:2.4
        ports:
        - containerPort: 80

K8s Service

Nginx Service

apiVersion: v1
kind: Service
metadata:
  name: nginx-svc
spec:
  ports:
  - port: 80
    targetPort: 80
    protocol: TCP
    name: http
  selector:
    app: nginx

Apache Service

apiVersion: v1
kind: Service
metadata:
  name: apache-svc
spec:
  ports:
  - port: 80
    targetPort: 80
    protocol: TCP
    name: http
  selector:
    app: apache

Virtual Hosts without TLS

In order to pass the requests from the Nginx controller to our applications, we need to roll out a suitable Kubernetes Ingress object. In the spec section of the Ingress object we can define different paths and virtual hosts. In this example we see vHosts for nginx.nws.netways.de and apache.nws.netways.de. For each of the two vHosts, the corresponding service is of course entered in the backend area.

The public IP can be found in the service of the Nginx Ingress Controller, and kubectl describe shows all important details about the service (see below). For testing, it is best to modify your /etc/hosts file and enter the IP listed under LoadBalancer Ingress there.

K8s Ingress

apiVersion: networking.k8s.io/v1beta1
kind: Ingress
metadata:
  name: my-ingress
spec:
  rules:
  - host: apache.nws.netways.de
    http:
      paths:
      - backend:
          serviceName: apache-svc
          servicePort: 80
  - host: nginx.nws.netways.de
    http:
      paths:
      - backend:
          serviceName: nginx-svc
          servicePort: 80

kubectl describe service/my-ingress-nginx-ingress-controller

kubectl get service/my-ingress-nginx-ingress-controller -o jsonpath='{.status.loadBalancer.ingress[].ip}'
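What the ingress controller does with the two rules can be pictured as a simple lookup by host name and path. The following Python sketch is a simplified model, not the nginx implementation: the table `RULES`, the function `route` and the plain prefix comparison are assumptions for illustration, and since the Ingress object above defines no paths, each vHost is modelled with the catch-all prefix `/`.

```python
# Routing table mirroring the two rules of the Ingress object:
# virtual host -> list of (path prefix, service name, service port).
RULES = {
    "apache.nws.netways.de": [("/", "apache-svc", 80)],
    "nginx.nws.netways.de": [("/", "nginx-svc", 80)],
}

def route(host, path, rules=RULES, default=("default-backend", 80)):
    """Return the (service, port) that should receive a request."""
    hostname = host.split(":")[0].lower()  # ignore an optional port in the Host header
    matches = [
        (prefix, service, port)
        for prefix, service, port in rules.get(hostname, [])
        if path.startswith(prefix)
    ]
    if not matches:
        return default  # unknown vHost or path
    # The longest matching prefix wins.
    _, service, port = max(matches, key=lambda rule: len(rule[0]))
    return service, port

print(route("apache.nws.netways.de", "/index.html"))
print(route("nginx.nws.netways.de:80", "/"))
print(route("unknown.example.org", "/"))
```

All three requests arrive at the same public IP. Only the Host header decides which service receives them, which is exactly why one load balancer is enough for many applications.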

Virtual Hosts with TLS

Of course, you rarely offer applications publicly accessible without encryption. Especially for TLS certificates, Kubernetes has its own type tls within the secret object. All you need is a TLS certificate and the corresponding key. With kubectl you can store the pair in Kubernetes:

kubectl create secret tls my-secret --key cert.key --cert cert.crt

The created secret can then be referenced by the specified name my-secret in spec of the Ingress object. To do this, enter our virtual host and the corresponding TLS certificate in the hosts array within tls. An automatic redirect from http to https is activated from the beginning.

apiVersion: networking.k8s.io/v1beta1
kind: Ingress
metadata:
  name: my-ingress
spec:
  tls:
  - hosts:
    - apache.nws.netways.de
    - nginx.nws.netways.de
    secretName: my-secret
  rules:
  - host: apache.nws.netways.de
    http:
      paths:
      - backend:
          serviceName: apache-svc
          servicePort: 80
  - host: nginx.nws.netways.de
    http:
      paths:
      - backend:
          serviceName: nginx-svc
          servicePort: 80

Conclusion

With the Nginx Ingress Controller it is easy to make your web-based applications publicly accessible. The features and configuration options offered should cover the requirements of all applications and can be found in the official User Guide. Besides your own application, you only need a Helm Chart and a K8s Ingress object. Kubernetes also manages to hide many complex layers and technologies with only a few abstract objects like deployment and ingress. With a NETWAYS Managed Kubernetes solution, you can take full advantage of this abstraction and focus on your own application. So, get started!
