Cloud GPUs

Process Large Amounts of Data in No Time.

- NVIDIA® A10 GPU

- AI Frameworks

- GDDR6 Memory

Ready in minutes

NETWAYS Cloud GPUs

Hosted and made with love in Germany.

NVIDIA® Tensor Cores and RT Cores

NVIDIA® A10 GPU

AI Frameworks

PCI Passthrough

GDDR6 Memory

Powered by OpenStack

Cloud GPUs Pricing

604.44 €

per month

- 24 GB RAM

- 600 GB/s Memory Bandwidth

Pay-as-you-go | Scale on demand | Cancel at any time

* This is a rough estimate based on our current prices. Adding services may increase costs. Please see our pricing page for detailed information.

The Advantages of GPUs

Meeting today’s challenges in design, creativity and science, the new GPUs (Graphics Processing Units) combine professional graphics with processing power and AI (Artificial Intelligence) acceleration. Whether for 3D rendering, machine learning, or other AI workloads: process large or complex amounts of data in no time with the NVIDIA A10 graphics card!

Parallel Processing

Lower Costs

High Performance

Use-Case Scenarios

Machine Learning

In machine learning and deep learning tasks, GPUs are essential for accelerating model training and inference. GPU passthrough in cloud environments allows you to access dedicated GPUs for your workloads:

You can train complex machine learning models more quickly and efficiently by leveraging the power of dedicated GPUs. GPU passthrough ensures that VMs have direct access to the GPU’s computational resources, reducing training times.
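To check that the passthrough actually works, you can query the GPU from inside the running VM with `nvidia-smi`. The sketch below assumes the NVIDIA driver is already installed in the guest (a plain Ubuntu cloud image typically does not include it); the helper name `check_gpu` is hypothetical.

```shell
#!/usr/bin/env bash
# Hypothetical helper: report the GPU(s) visible inside the VM.
# Assumption: the NVIDIA driver, and therefore nvidia-smi, is installed in the guest.
check_gpu() {
  if ! command -v nvidia-smi >/dev/null 2>&1; then
    echo "nvidia-smi not found: is the NVIDIA driver installed?" >&2
    return 1
  fi
  # Print one CSV line per GPU: model name and total memory
  nvidia-smi --query-gpu=name,memory.total --format=csv,noheader
}
```

If `check_gpu` prints no NVIDIA device, `lspci | grep -i nvidia` shows whether the card is at least visible on the VM's PCI bus.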

Remote Workstations

Professionals who require powerful workstations for tasks like data analysis, 3D rendering, and software development can access GPU-passthrough-powered remote workstations from anywhere, enabling flexible and remote work options.

GPU passthrough also lets data analysts and scientists use GPUs for data visualization and analysis, enabling faster processing of large datasets and real-time visualizations.

No limits for your data processing

Fast data processing

There is always a need for more speed, and our GPUs are a great way to overcome processing limitations.

ISO-27001 certified Data Centers

All data is processed and stored in compliance with the GDPR in ISO-27001 certified data centers located in Germany.

Do more with your Cloud GPUs

Make your cloud project a great fit by choosing only the resources you need, in exactly the right size and amount, and by scaling out and in at any time. Our NETWAYS Cloud Services, based on OpenStack, offer a wide range of compute, storage and network resources built on the latest technology. Create your modern IT infrastructure with ease.

Fly high with our Cloud

Cloud Services are a crucial part of modern computing, offering a flexible and scalable virtual infrastructure. With us, as a GDPR-compliant hosting provider with ISO-27001 certified data centers in Germany, you can launch your reliable Cloud Services in just minutes!

Flexible

Dynamically adapt to changing requirements. We provide the resources, as needed. Ready, whenever you are.

Scalable

We’re built for scale-out, and so is our NWS Cloud, based on OpenStack. With us, even the sky is not the limit.

Support(ive)

GDPR Compliant

Pay-as-you-go

Get things done with us in a timely manner and pay only for what you use. Saving costs? Sounds savvy.

Focus On You

Usage Examples

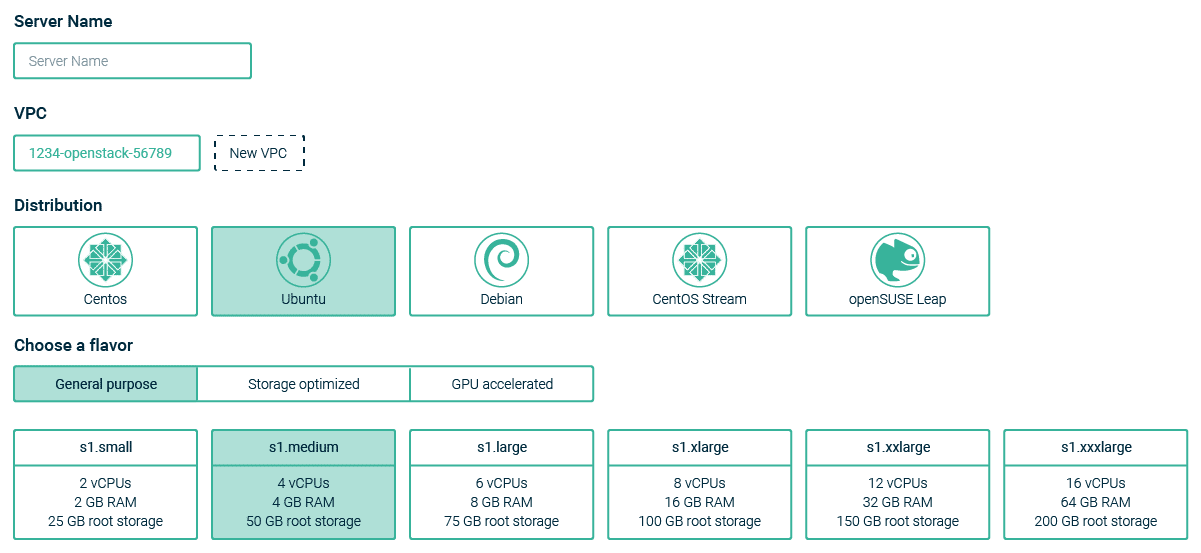

Create your instance using the command line interface (CLI), the REST API, or our web interface.

OpenStack CLI

Use the OpenStack CLI to create a new instance with a GPU attached via PCI passthrough.

openstack server create --image 'Ubuntu Jammy 22.04 LTS' --flavor g1.xlarge --network vpcnetwork myinstance
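`openstack server create` returns before the instance has finished building. The sketch below polls the server status with `openstack server show ... -f value -c status` until it reports `ACTIVE` or `ERROR`. The helper name `wait_for_active`, the 5-second interval (overridable via a hypothetical `POLL_INTERVAL` variable) and the attempt limit are arbitrary choices for illustration; the CLI's own `--wait` flag on `server create` is a simpler alternative.

```shell
#!/usr/bin/env bash
# Hypothetical helper: poll an OpenStack server until it is ACTIVE.
# Usage: wait_for_active <server-name-or-id> [max-attempts]
wait_for_active() {
  local server="$1"
  local max_attempts="${2:-60}"   # arbitrary default: 60 attempts x 5 s = 5 minutes
  local status attempt
  for ((attempt = 1; attempt <= max_attempts; attempt++)); do
    # -f value -c status prints only the status field, e.g. BUILD or ACTIVE
    status="$(openstack server show "$server" -f value -c status)" || return 1
    case "$status" in
      ACTIVE) echo "$server is ACTIVE"; return 0 ;;
      ERROR)  echo "$server failed to build" >&2; return 1 ;;
    esac
    sleep "${POLL_INTERVAL:-5}"
  done
  echo "timed out waiting for $server" >&2
  return 1
}
```

For the example above, run `wait_for_active myinstance` right after the create command.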

REST-API

The REST API lets you create an OpenStack instance directly. Client libraries are also available for the most popular programming languages.

curl -X POST https://cloud.netways.de:8774/v2.1/servers -H "Content-Type: application/json" -H "X-Auth-Token: $TOKEN" -d '{ "server": { "name": "myinstance", "flavorRef": "'$FLAVOR_ID'", "imageRef": "'$IMAGE_ID'", "networks": [ { "uuid": "'$NETWORK_ID'" } ] } }'
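The `curl` call above expects `$TOKEN`, `$FLAVOR_ID`, `$IMAGE_ID` and `$NETWORK_ID` to be set already. One way to fill them, sketched below, is to let the OpenStack CLI look them up by name (`openstack token issue` and the `show` subcommands with `-f value -c id`) and to build the JSON body with `printf` so the quoting stays readable. Both helper names are hypothetical, and the flavor, image and network names are simply those from the CLI example; adjust them to your project.

```shell
#!/usr/bin/env bash
# Hypothetical helper: look up the values the REST call needs, by name.
# Names are taken from the CLI example; change them for your project.
resolve_ids() {
  TOKEN="$(openstack token issue -f value -c id)" || return 1
  FLAVOR_ID="$(openstack flavor show g1.xlarge -f value -c id)" || return 1
  IMAGE_ID="$(openstack image show 'Ubuntu Jammy 22.04 LTS' -f value -c id)" || return 1
  NETWORK_ID="$(openstack network show vpcnetwork -f value -c id)" || return 1
  export TOKEN FLAVOR_ID IMAGE_ID NETWORK_ID
}

# Hypothetical helper: print the JSON body for POST /v2.1/servers.
# Arguments: name, flavor ID, image ID, network ID (no quotes or backslashes expected).
server_payload() {
  printf '{"server": {"name": "%s", "flavorRef": "%s", "imageRef": "%s", "networks": [{"uuid": "%s"}]}}\n' \
    "$1" "$2" "$3" "$4"
}
```

Usage: call `resolve_ids`, then pass `-d "$(server_payload myinstance "$FLAVOR_ID" "$IMAGE_ID" "$NETWORK_ID")"` to the `curl` command instead of the hand-quoted JSON string.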

Web Interface

Create your Cloud Server using the NETWAYS Web Services.

Nice to know

What does GPU stand for?

GPU stands for Graphics Processing Unit. It is a specialized electronic circuit designed to rapidly manipulate and alter memory to accelerate the creation of images in a frame buffer intended for output to a display device. Originally developed to accelerate 3D graphics rendering, GPUs have evolved to be used for a wide range of computationally intensive tasks, such as scientific simulations, machine learning, and cryptocurrency mining. Compared to CPUs, GPUs have many more cores, which allows them to perform many more calculations in parallel, making them much faster for certain types of processing tasks.

Is using GPUs a new hype or pretty common?

Using GPUs (Graphics Processing Units) for general-purpose computing is not a new concept, but it has become increasingly common in recent years due to the rise of deep learning, big data, and other computationally intensive applications.

In the past, GPUs were primarily used for graphics rendering, but their parallel processing capabilities made them well-suited for other types of applications that require heavy computation, such as scientific simulations and data processing. However, the high cost of GPUs and the specialized programming skills required to use them effectively limited their widespread adoption for these applications.

In recent years, however, the development of libraries and frameworks, such as CUDA and TensorFlow, has made it easier to program and use GPUs for a wider range of applications. Additionally, advancements in GPU hardware, such as increased memory bandwidth and specialized processing units like Tensor Cores, have made GPUs more powerful and efficient, further driving their adoption.

So while using GPUs for general-purpose computing is not a new concept, their increased adoption in recent years can be seen as a reflection of the growing need for high-performance computing in many fields, as well as the ongoing development and refinement of GPU technology and software.

How much faster is a GPU compared to a CPU?

For tasks that can be parallelized and require large amounts of data processing, GPUs can be significantly faster than CPUs. This is because GPUs are designed with many more processing cores than CPUs, which allows them to perform many more calculations in parallel.

For example, tasks such as image and video processing, scientific simulations, and machine learning can see significant speed improvements when using GPUs over CPUs. In some cases, a GPU can be hundreds of times faster than a CPU.
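The qualifier "for tasks that can be parallelized" matters. A standard way to reason about it is Amdahl's law: if a fraction $p$ of a program's runtime can be spread across $N$ parallel workers and the rest stays serial, the overall speedup $S$ is bounded as shown below. The numbers are illustrative values, not a benchmark of the A10.

```latex
\[ S(N) = \frac{1}{(1 - p) + \frac{p}{N}}, \qquad \lim_{N \to \infty} S(N) = \frac{1}{1 - p} \]
\[ p = 0.95,\ N = 1000: \quad S = \frac{1}{0.05 + 0.00095} \approx 19.6 \quad (\text{bound: } 20) \]
\[ p = 0.999: \quad \lim_{N \to \infty} S(N) = \frac{1}{0.001} = 1000 \]
```

In other words, speedups of hundreds of times are only possible when nearly all of the work parallelizes; otherwise the serial part quickly becomes the bottleneck.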

Who can I contact in case of a problem?

Contact Us!

Do you have any requests or questions, or just want to say ‘hi’? Get in touch with us! We are happy to hear from you! Send us a message and we’ll see you in a bit!