Manage Kubernetes Nodegroups

As of this week, our customers can use the “node group feature” for their NWS Managed Kubernetes Cluster plan. What are node groups and what can I do with them? Our seventh blog post in the series explains this and more.

What are Node Groups?

With node groups, you can create several groups of Kubernetes nodes and manage them independently of each other. A node group is a set of virtual machines that share common attributes. Above all, it determines which flavour, i.e. which VM model, is used within the group, but other attributes can be selected as well. Each node group can be scaled horizontally (by changing its node count) at any time, independently of the others.

Why Node Groups?

Node groups are useful for running pods on specific nodes. For example, you can define a group with the “availability zone” attribute. In addition to the existing “default-nodegroup”, which distributes its nodes more or less arbitrarily across all availability zones, you can create further node groups that are each started explicitly in a single availability zone. Within the Kubernetes cluster, you can then assign your pods to the corresponding availability zones or node groups.

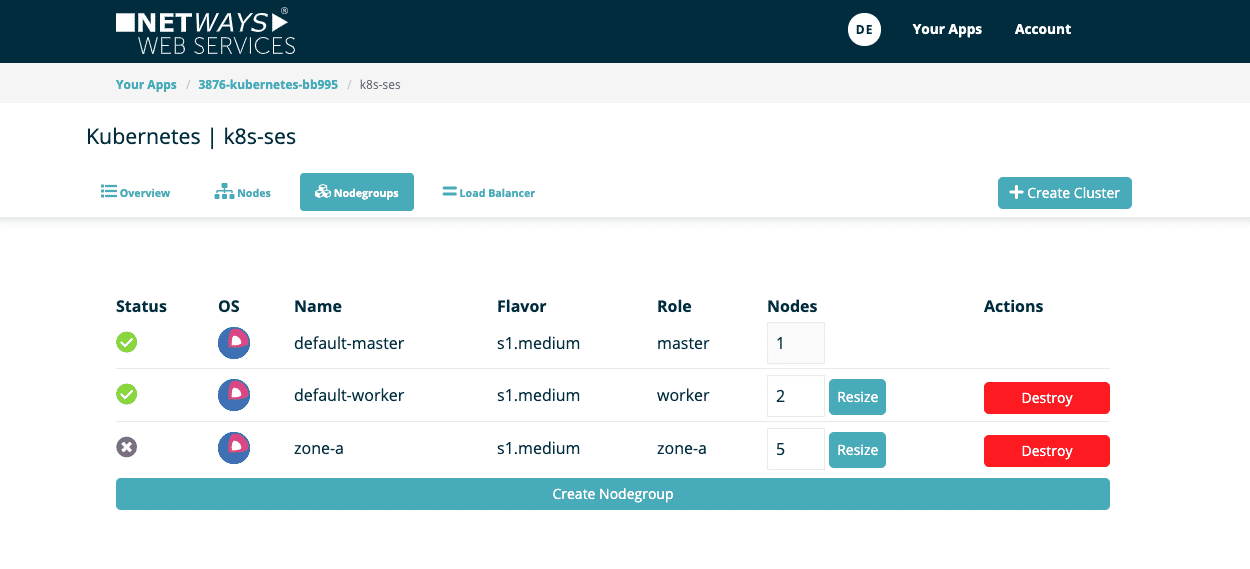

Show existing Node Groups

The first image shows our example Kubernetes cluster “k8s-ses”. It currently has two node groups: “default-master” and “default-worker”.

Create a Node Group

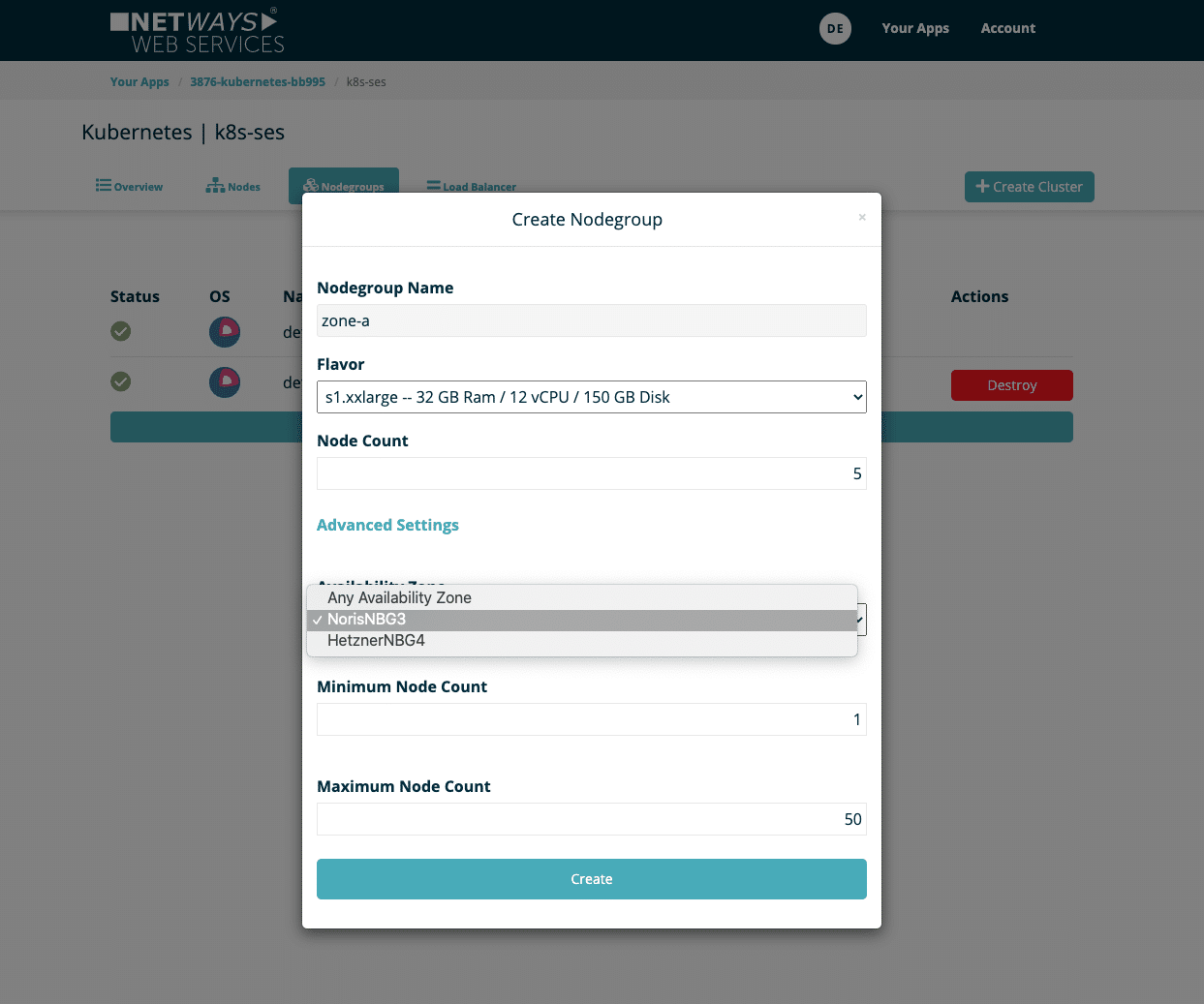

A new nodegroup can be created via the ‘Create Nodegroup’ dialogue with the following options:

- Name: Name of the nodegroup, which can later be used as a label for K8s

- Flavor: Size of the virtual machines used

- Node Count: Number of initial nodes, can be increased and decreased later at any time

- Availability Zone: A specific availability zone

- Minimum Node Count: The node group must not contain fewer nodes than the defined value

- Maximum Node Count: The node group cannot grow to more than the specified number of nodes

The last two options matter most for autoscaling: they set the lower and upper bounds within which the automatic mechanism may resize the group.

You will then see the new node group in the overview. Provisioning the nodes takes only a few minutes. The node count of each group can be changed individually, and a group can be removed again at any time.

Using Node Groups in the Kubernetes Cluster

Within the Kubernetes cluster, you can see your new nodes after they have been provisioned and are ready for use.

    kubectl get nodes -L magnum.openstack.org/role
    NAME                                 STATUS   ROLES    AGE   VERSION   ROLE
    k8s-ses-6osreqalftvz-master-0        Ready    master   23h   v1.18.2   master
    k8s-ses-6osreqalftvz-node-0          Ready    <none>   23h   v1.18.2   worker
    k8s-ses-6osreqalftvz-node-1          Ready    <none>   23h   v1.18.2   worker
    k8s-ses-zone-a-vrzkdalqjcud-node-0   Ready    <none>   31s   v1.18.2   zone-a
    k8s-ses-zone-a-vrzkdalqjcud-node-1   Ready    <none>   31s   v1.18.2   zone-a
    k8s-ses-zone-a-vrzkdalqjcud-node-2   Ready    <none>   31s   v1.18.2   zone-a
    k8s-ses-zone-a-vrzkdalqjcud-node-3   Ready    <none>   31s   v1.18.2   zone-a
    k8s-ses-zone-a-vrzkdalqjcud-node-4   Ready    <none>   31s   v1.18.2   zone-a

The node labels magnum.openstack.org/nodegroup and magnum.openstack.org/role bear the name of the node group for nodes that belong to the group. There is also the label topology.kubernetes.io/zone, which carries the name of the Availability Zone.

Deployments or pods can be assigned to specific nodes or node groups using a nodeSelector:

    nodeSelector:
      magnum.openstack.org/role: zone-a
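To show where this selector sits in a real workload, here is a minimal sketch of a complete Deployment. Only the nodeSelector entry comes from the example above; the name demo-zone-a, the app label, the replica count and the nginx image are hypothetical placeholders chosen for illustration.

```yaml
# Hypothetical Deployment: name, labels, replica count and image are placeholders.
apiVersion: apps/v1
kind: Deployment
metadata:
  name: demo-zone-a
spec:
  replicas: 3
  selector:
    matchLabels:
      app: demo-zone-a
  template:
    metadata:
      labels:
        app: demo-zone-a
    spec:
      # Schedule pods only on nodes that carry the node group's role label.
      nodeSelector:
        magnum.openstack.org/role: zone-a
      containers:
        - name: web
          image: nginx:1.19
          ports:
            - containerPort: 80
```

After applying the manifest with kubectl apply -f, kubectl get pods -o wide lists the node each pod was scheduled on, which should be one of the zone-a nodes. The same pattern should work with the topology.kubernetes.io/zone label if you prefer to select by availability zone rather than by node group.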

Would you like to see for yourself how easy a Managed Kubernetes plan is at NWS? Then try it out right now at: https://nws.netways.de/de/kubernetes/
