Load Balancer

Boost Your Application's Performance

- High Availability

- Scalability

- Health Monitoring

Ready in minutes

NETWAYS Load Balancer

Hosted and made with love in Germany.

- High Availability

- Scalability

- SSL/TLS Termination

- Health Monitoring

- Session Persistence

Powered by OpenStack

Load Balancer Pricing

23.00 €

per month

- HA Setup across Availability Zones

- Virtual public IP

Pay-as-you-go | Scale on demand | Cancel at any time

* This is a rough estimate based on our current prices. Adding further services may increase costs. Please see our pricing page for detailed information.

Features of NETWAYS Load Balancer

You can deploy multiple application servers, all served through a single Load Balancer with a unified DNS name and IP address. This makes scaling easy and keeps your application available at all times.

Scalability

Scale horizontally and handle increasing or decreasing traffic volume by adding or removing servers dynamically without interruption.

High Availability

Our load balancer setup provides high availability and fault tolerance by detecting failed load balancer instances. It fails over to a healthy LB node and redeploys the failed one automatically.

Health Monitoring

A load balancer offers integrated health monitoring by checking the state of all its configured servers. In case of a misbehaving server, the traffic is automatically redirected to functional servers to minimize any potential disruptions.

Use-Case Scenarios

High Availability

Load balancers are often used to ensure high availability and fault tolerance for web applications. In our cloud environment, you can distribute incoming traffic across multiple instances of your application deployed across different availability zones. If one instance or data center experiences downtime due to hardware failure or other issues, the load balancer automatically redirects traffic to healthy instances, minimizing service disruptions. This use case matters most for mission-critical applications where uninterrupted availability is essential.

Scalability and Elasticity

Load balancers play a key role in scaling web applications dynamically to handle fluctuating traffic loads. With cloud scaling features, you can configure load balancers to add or remove instances based on real-time traffic demands. During traffic spikes, new instances can be provisioned and added to the load balancer pool to maintain optimal performance. Conversely, during periods of low traffic, surplus instances can be removed to save costs. This use case is ideal for e-commerce websites and any application with variable traffic patterns.
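The scaling decision described above can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the function name, the per-instance capacity of 100 requests per second, and the minimum and maximum instance counts are all hypothetical and not part of the NETWAYS service.

```python
import math


def desired_instances(requests_per_second, capacity_per_instance,
                      minimum=2, maximum=10):
    """Return how many instances the pool should hold for the given load.

    All parameters are hypothetical tuning values for this sketch.
    """
    needed = math.ceil(requests_per_second / capacity_per_instance)
    # Never drop below the minimum (keeps redundancy) or exceed the maximum.
    return max(minimum, min(maximum, needed))


# Low traffic, a normal day, and a traffic spike.
for load in (50, 450, 5000):
    print(load, desired_instances(load, capacity_per_instance=100))
```

Keeping a minimum of two instances means that a single failure still leaves a healthy server behind the load balancer, even when traffic is low.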

Your Service – Always Available

Updates without downtime

Direct your incoming traffic to available nodes while the others are being updated, even over a longer period. Roll out new versions without downtime pressure.

ISO-27001 certified Datacenters

All data is stored in a GDPR-compliant manner in ISO-27001 certified data centers located in Germany.

Do more with your Load Balancer

Make your cloud project a great fit by choosing only the resources you need, in exactly the right size and amount, and scaling out and in at any time. Our NETWAYS Cloud Services based on OpenStack offer many compute, storage and network resources built on the latest technology. Create your modern IT infrastructure with ease.

Fly high with our Cloud

Cloud Services are a crucial part of modern computing, offering a flexible and scalable virtual infrastructure. With us, as a GDPR-compliant hosting provider with ISO-27001 certified data centers in Germany, you can launch your reliable Cloud Services in just minutes!

Flexible

Dynamically adapt to changing requirements. We provide the resources, as needed. Ready, whenever you are.

Scalable

We’re built for scale-out – and so is our NWS Cloud, based on OpenStack. With us, even the sky is not the limit.

Support(ive)

GDPR Compliant

Pay-as-you-go

Get things done with us in a timely manner and pay only for what you use. Saving costs? Sounds savvy.

Focus On You

Usage Examples

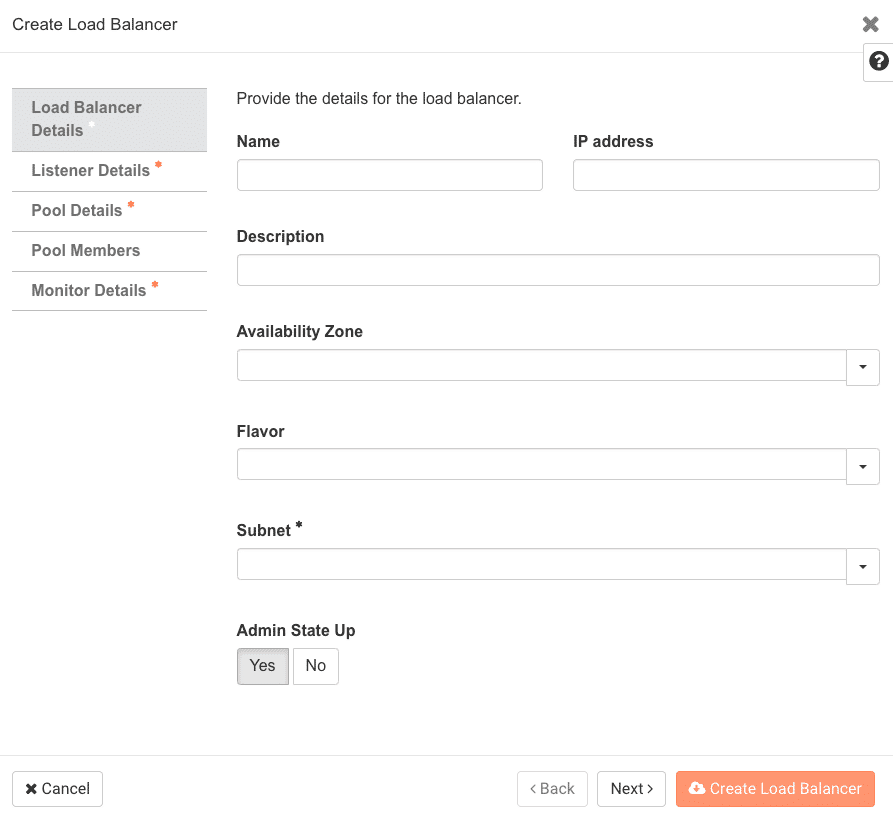

Create your Load Balancer using the command line interface (CLI), the REST API, or our web interface.

OpenStack CLI

Use the OpenStack CLI to create a new Load Balancer and add some servers to it.

    # Create the Load Balancer
    openstack loadbalancer create --name lb1 --vip-subnet-id public-subnet

    # Create a Listener
    openstack loadbalancer listener create --name listener1 --protocol HTTP --protocol-port 80 lb1

    # Create a Member-Pool
    openstack loadbalancer pool create --name pool1 --lb-algorithm ROUND_ROBIN --listener listener1 --protocol HTTP

    # Add Members to the Pool
    openstack loadbalancer member create --subnet-id private-subnet --address 192.0.2.10 --protocol-port 80 pool1
    openstack loadbalancer member create --subnet-id private-subnet --address 192.0.2.11 --protocol-port 80 pool1
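The pool above uses the ROUND_ROBIN algorithm, which hands each new request to the next member in turn. The following Python sketch illustrates the idea with the two member addresses from the commands above. It is a simplified model, not the load balancer's actual implementation, and the function name is made up for this example.

```python
from itertools import cycle


def round_robin(members, request_count):
    """Assign request_count requests to members in strict rotation."""
    rotation = cycle(members)
    return [next(rotation) for _ in range(request_count)]


members = ["192.0.2.10", "192.0.2.11"]

# Five incoming requests alternate between the two pool members.
for number, target in enumerate(round_robin(members, 5), start=1):
    print(f"request {number} -> {target}")
```

With equally sized servers this spreads the load evenly. Adding a third address to the list is all it takes for the rotation to include it.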

REST API

The REST API lets you create a Load Balancer. Libraries for the most popular programming languages are also available. Replace <token> with a valid authentication token and <subnet-id> with the ID of the subnet that should hold the virtual IP.

    curl -X POST \
      -H "Content-Type: application/json" \
      -H "X-Auth-Token: <token>" \
      -d '{
        "loadbalancer": {
          "description": "My favorite load balancer",
          "name": "best_load_balancer",
          "availability_zone": "my_az",
          "vip_subnet_id": "<subnet-id>"
        }
      }' \
      https://cloud.netways.de:9876/v2/lbaas/loadbalancers
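A request like the curl call above can also be built from a script. The sketch below uses only the Python standard library and prepares the request without sending it. The function name and its parameters are hypothetical, and the `vip_subnet_id` field is included on the assumption that the API needs a subnet for the virtual IP, as the `--vip-subnet-id` option in the CLI example suggests.

```python
import json
import urllib.request

# Endpoint taken from the curl example.
ENDPOINT = "https://cloud.netways.de:9876/v2/lbaas/loadbalancers"


def build_create_request(token, name, description, availability_zone,
                         vip_subnet_id):
    """Prepare (but do not send) a POST request that creates a load balancer."""
    payload = {
        "loadbalancer": {
            "name": name,
            "description": description,
            "availability_zone": availability_zone,
            "vip_subnet_id": vip_subnet_id,  # assumption, see text above
        }
    }
    return urllib.request.Request(
        ENDPOINT,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json", "X-Auth-Token": token},
        method="POST",
    )


request = build_create_request(
    token="<token>",  # placeholder, replace with a real token
    name="best_load_balancer",
    description="My favorite load balancer",
    availability_zone="my_az",
    vip_subnet_id="<subnet-id>",  # placeholder
)
print(request.get_method(), request.full_url)
print(request.data.decode("utf-8"))
```

To send the request, pass the object to `urllib.request.urlopen(request)` once the placeholders hold real values.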

Web Interface

Create your Load Balancer using the NETWAYS Web Services Cloud Web Interface.

(OpenStack Horizon)

Nice to know

What is a Load Balancer?

A load balancer is a type of network device or software that distributes incoming network traffic across multiple servers or computing resources in order to improve application availability, scalability, and performance.

Load balancers are commonly used in high-traffic websites, web applications, and other network-based services to evenly distribute incoming requests across multiple servers or computing resources. This helps to prevent any one server or resource from becoming overloaded, which can cause downtime or slow response times for users.

Is there an impact on performance using a Load Balancer?

There can be a slight impact on performance when using a load balancer, as the load balancer itself introduces a small amount of latency into the network. However, the overall impact on performance is generally minimal, and can often be outweighed by the benefits of improved application availability, scalability, and resilience.

The exact impact on performance will depend on several factors, including the type of load balancer being used, the workload being balanced, the network infrastructure, and the size and complexity of the application. Some load balancers may introduce more latency than others, depending on the algorithms they use to distribute traffic and the resources available to them.

How does a Load Balancer detect an offline node?

Load balancers can perform periodic health checks on each server in the pool to verify that it is responsive and functioning correctly. If a server fails a health check, the load balancer can automatically remove it from the pool and redirect traffic to other available servers.
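The health check logic can be pictured with a small Python simulation. It is a sketch under assumptions, not the service's implementation: the `Member` class, the function names, and the thresholds (three failed checks to mark a server offline, two passed checks to bring it back) are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Member:
    address: str
    healthy: bool = True
    failures: int = 0   # consecutive failed checks
    successes: int = 0  # consecutive passed checks


def record_check(member, passed, fall_threshold=3, rise_threshold=2):
    """Update a member's state after one health check result."""
    if passed:
        member.successes += 1
        member.failures = 0
        if not member.healthy and member.successes >= rise_threshold:
            member.healthy = True
    else:
        member.failures += 1
        member.successes = 0
        if member.healthy and member.failures >= fall_threshold:
            member.healthy = False
    return member.healthy


def healthy_members(pool):
    """Return the addresses that should currently receive traffic."""
    return [member.address for member in pool if member.healthy]


pool = [Member("192.0.2.10"), Member("192.0.2.11")]

# The second server fails three checks in a row and leaves the rotation.
for _ in range(3):
    record_check(pool[1], passed=False)
print(healthy_members(pool))

# After two passed checks it is used again.
for _ in range(2):
    record_check(pool[1], passed=True)
print(healthy_members(pool))
```

Requiring several consecutive results before changing a server's state avoids removing a server because of a single slow response.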

Who can I contact in case of a problem?

Contact Us!

Do you have any requests or questions, or just want to say ‘hi’? Get in touch with us! We are happy to hear from you! Send us a message and we’ll see you in a bit!