by Daniel Bodky | Jul 25, 2024 | Kubernetes, Tutorials

More and more stateful workloads are making their way into production Kubernetes clusters these days. Chances are, then, that you’re already using PersistentVolumes or PersistentVolumeClaims (PVs/PVCs) alongside your deployed workloads.

If you want to thoroughly secure those applications, you need to take care of your data as well. A good first step is to use encrypted storage on Kubernetes, and in this tutorial we will take a look at how to achieve this on MyNWS Managed Kubernetes clusters.

Did you know?

You can follow along with the tutorial on your own MyNWS managed cluster or on our interactive playground – it’s completely free!

Prerequisites

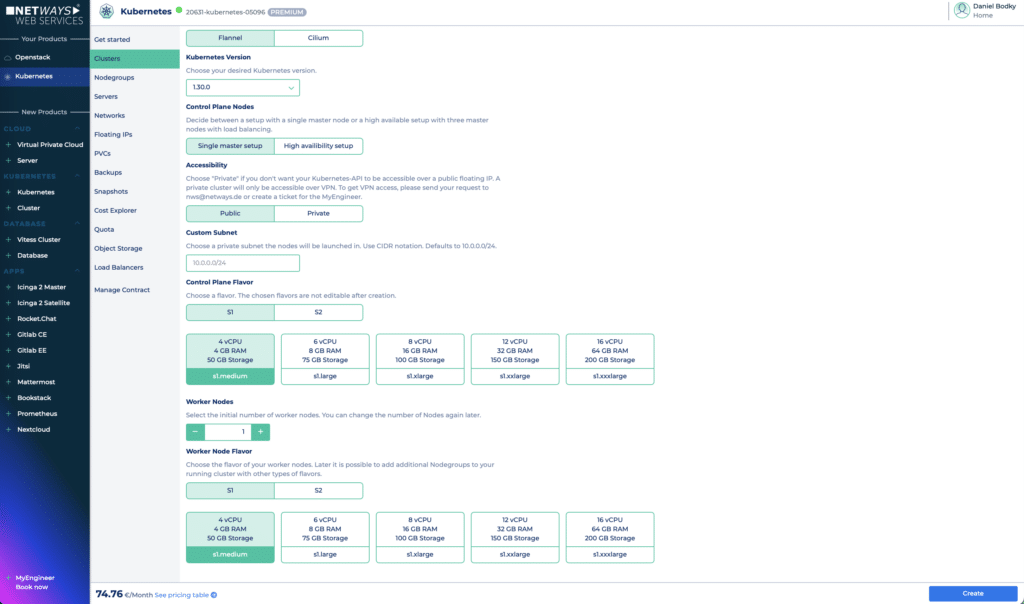

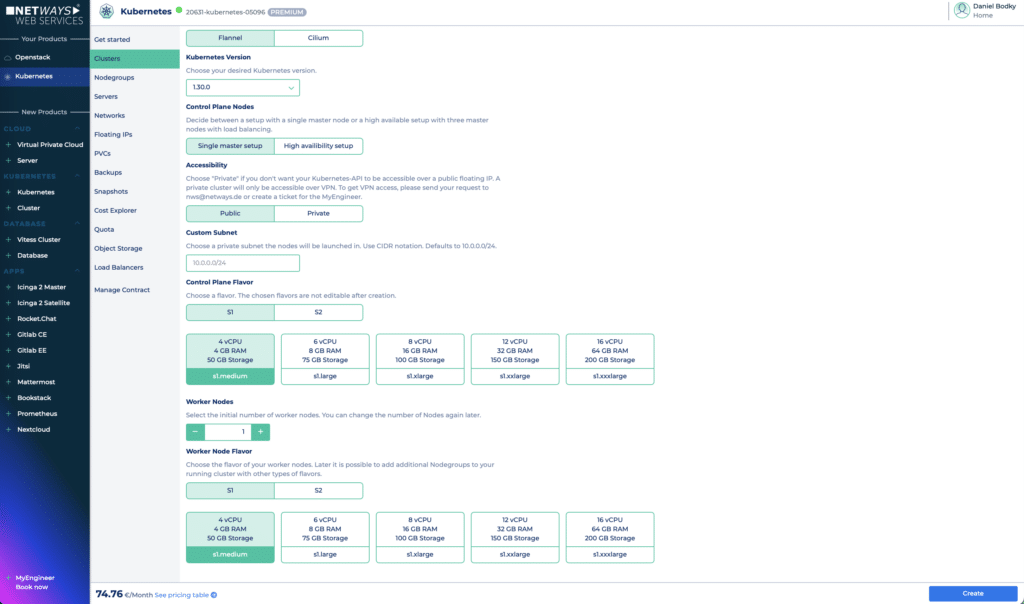

To try out MyNWS encrypted storage on Kubernetes, you will have to create a cluster first. This can be done from the Kubernetes menu on the MyNWS dashboard. For this tutorial, the smallest possible setup, consisting of one control plane node and one worker node, both of size s1.medium, will suffice. Make sure your cluster is publicly available so that you can reach it from the internet, then click on Create.

To start with encrypted storage on Kubernetes, make sure your settings look similar to the ones in the screenshot.

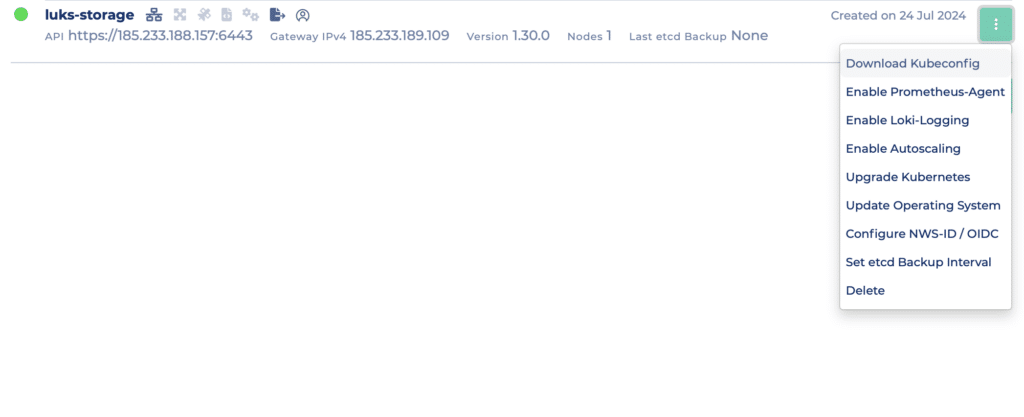

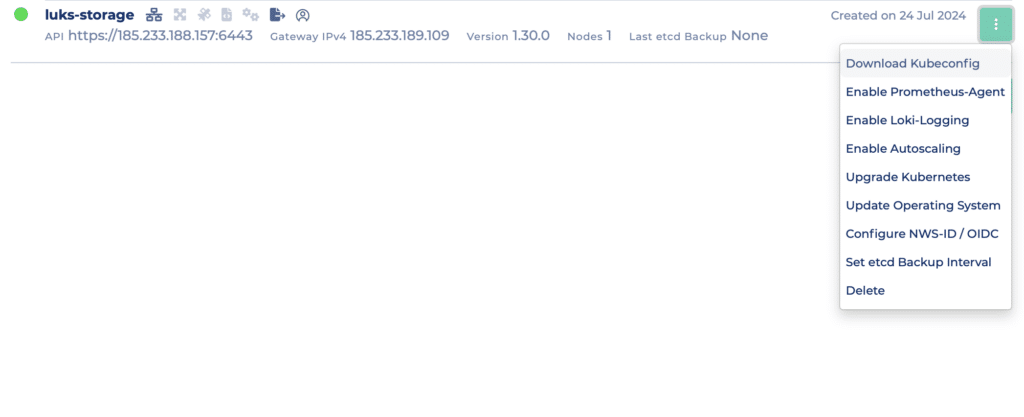

After a few minutes, your cluster will be ready. Download your kubeconfig as shown below (you can choose between an OIDC-based config and an admin config), and we can get started with exploring our storage options.

Download your cluster’s kubeconfig file from the cluster’s context menu.

Inspecting Available Storage Options

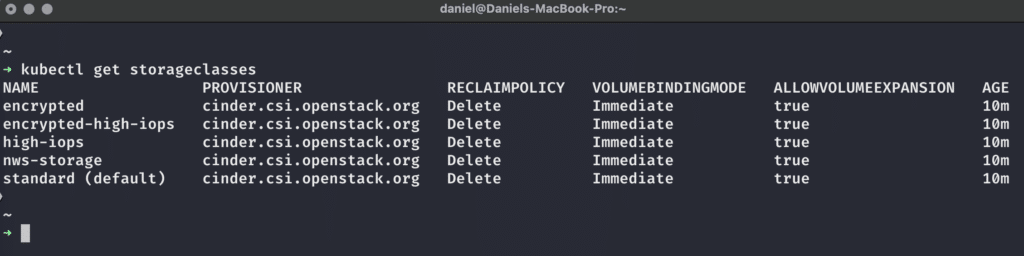

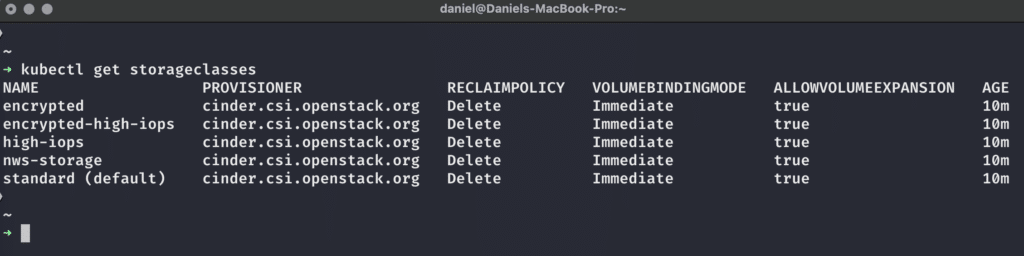

Once we have our kubeconfig file and can connect to our cluster, we can display all StorageClasses available to our cluster with the command kubectl get storageclasses.

You get five different StorageClasses out of the box on MyNWS Managed Kubernetes clusters.

As we can see, all of the available StorageClasses are configured the same way regarding Provisioner, ReclaimPolicy, VolumeBindingMode, and AllowVolumeExpansion – for more information on these matters, please see the official Kubernetes documentation.

The most interesting part for us at the moment is the provisioner – it’s cinder.csi.openstack.org for all of the StorageClasses. This means that behind the scenes, OpenStack will take care of creating, managing, and deleting the PersistentVolumes within our Kubernetes clusters for us.

But what do those StorageClass names mean?

- standard is set as the default and will provision an ext4-formatted OpenStack volume with an IOPS limit of 1000 IOPS and bursts of up to 2000 IOPS.

- nws-storage is similar to standard, but formats the OpenStack volume as xfs instead of ext4.

- high-iops is a faster variant of nws-storage, with an IOPS limit of 2000 IOPS and bursts of up to 4000 IOPS.

- encrypted leverages an OpenStack volume that offers transparent, LUKS-encrypted storage with the IOPS limits of nws-storage.

- encrypted-high-iops combines the configurations of high-iops and encrypted.

For more information on the available StorageClasses in MyNWS Managed Kubernetes and how to define custom ones, please see our documentation.
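To give a rough idea of what a custom class might involve, here is a minimal sketch of a StorageClass for the cinder.csi.openstack.org provisioner. It assumes a Cinder volume type named my-encrypted-type, which is a hypothetical placeholder: the OpenStack volume types behind the built-in MyNWS classes aren’t listed in this post. The reclaim policy, binding mode, and expansion settings are illustrative choices, not necessarily the ones the built-in classes use.

```yaml
# Minimal sketch of a custom StorageClass for the OpenStack Cinder CSI driver.
# Assumption: "my-encrypted-type" is a hypothetical Cinder volume type;
# replace it with a volume type that exists in your OpenStack project.
apiVersion: storage.k8s.io/v1
kind: StorageClass
metadata:
  name: my-encrypted-xfs
provisioner: cinder.csi.openstack.org
parameters:
  # Cinder volume type the driver creates volumes from (hypothetical name)
  type: my-encrypted-type
  # Filesystem the volume is formatted with before it is mounted
  csi.storage.k8s.io/fstype: xfs
# Delete the OpenStack volume when its PVC is deleted
reclaimPolicy: Delete
# Provision the volume only once a Pod using the PVC gets scheduled
volumeBindingMode: WaitForFirstConsumer
allowVolumeExpansion: true
```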

This default setup serves a variety of application needs: applications that need lots of slow and/or fast storage but won’t store sensitive data can use the standard, nws-storage, or high-iops StorageClasses, while sensitive data can be kept on LUKS-encrypted storage via the encrypted(-high-iops) StorageClasses. But how do you use them, and how do they work?

Requesting Encrypted Storage on Kubernetes

Kubernetes provides us with a useful API for programmatically requesting storage from any StorageClass – a PersistentVolumeClaim (PVC). You define it as an object for the Kubernetes API to consume, e.g. via a YAML manifest, and Kubernetes and the external storage provisioners will do the heavy lifting.

In our case, this means that the cinder.csi.openstack.org provisioner will take care of the following things when we request encrypted storage on MyNWS Managed Kubernetes:

- Create encryption keys in Barbican, OpenStack’s key management system (KMS).

- Create a LUKS-encrypted volume in OpenStack.

- Make the volume available to our workloads on Kubernetes, already decrypted and ready for use!

Let’s see this in action: Below is an example of a PVC manifest, which we can apply to our cluster using kubectl apply -f <file>:

    apiVersion: v1
    kind: PersistentVolumeClaim
    metadata:
      name: encrypted-claim
      namespace: default
    spec:
      accessModes:
        - ReadWriteOnce
      resources:
        requests:
          storage: 8Gi
      storageClassName: encrypted

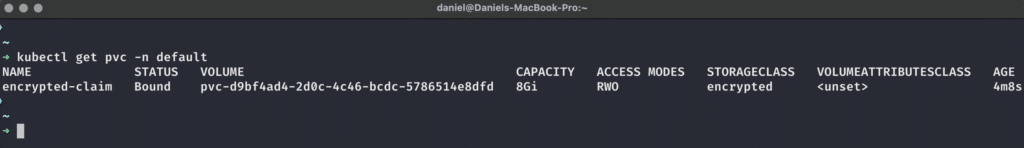

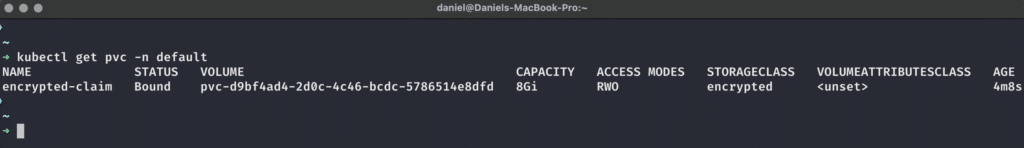

We can check on the PVC using kubectl get pvc -n default, and see that a PVC has been created and bound to a PersistentVolume, which is the actual, encrypted storage provided for us by OpenStack:

This PVC is bound to an encrypted PV with a capacity of 8Gi.

Let’s see if we can use the volume from inside our workloads!

Accessing Encrypted Storage on Kubernetes

As users of any Managed Kubernetes offering, we probably don’t want to deal with the intricacies of key management systems (KMS) and volume provisioners, let alone encrypt and decrypt our volumes by hand. We just want to use storage with our applications running on top of Kubernetes. So let’s see if OpenStack and MyNWS Managed Kubernetes allow us to do exactly that by deploying this demo application:

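    apiVersion: apps/v1
    kind: Deployment
    metadata:
      name: test-deployment
      namespace: default
    spec:
      selector:
        matchLabels:
          app: nginx
      replicas: 1
      # Stop the old Pod before starting the new one, so that two Pods never
      # compete for the ReadWriteOnce volume (e.g. on different nodes) on restart
      strategy:
        type: Recreate
      template:
        metadata:
          labels:
            app: nginx
        spec:
          containers:
            - name: nginx
              image: nginx:latest
              volumeMounts:
                # Mount the encrypted volume at /data-encrypted inside the container
                - name: encrypted-storage
                  mountPath: /data-encrypted
          volumes:
            # Back the volume with the PVC created earlier
            - name: encrypted-storage
              persistentVolumeClaim:
                claimName: encrypted-claim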

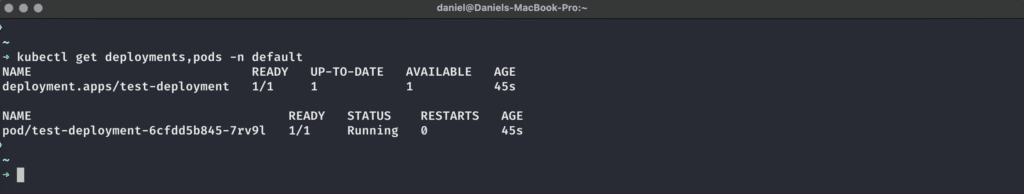

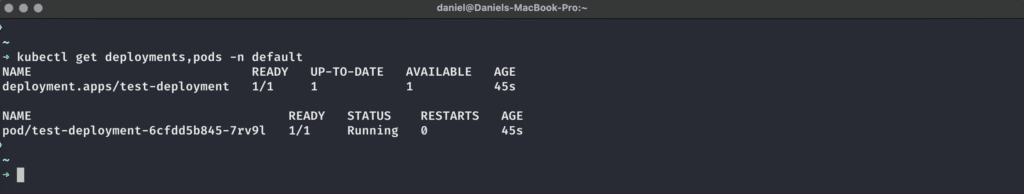

After applying this manifest to our cluster using kubectl apply -f <file>, we can check on the Deployment’s state to see if the Pod could successfully mount the referenced, encrypted PersistentVolumeClaim:

The Deployment and its deployed Pod are up and running – mounting the encrypted volume worked!

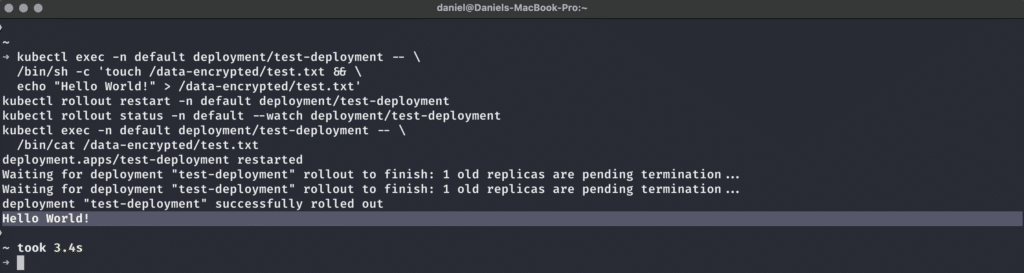

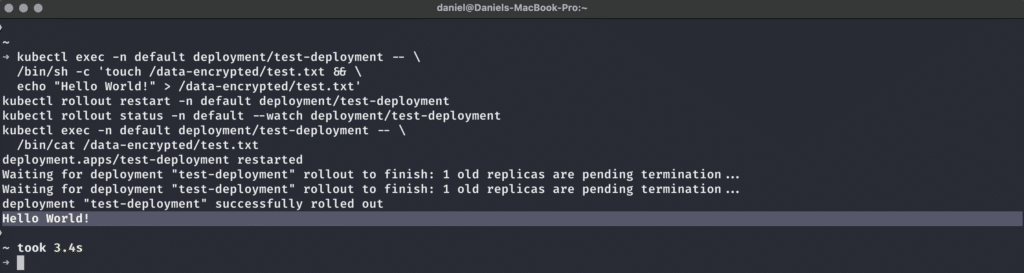

The final test will now be to see if we can read from and write to the supposedly encrypted volume from within our NGINX Pod, simulating our workload. We can do so with this sequence of commands:

    kubectl exec -n default deployment/test-deployment -- \
      /bin/sh -c 'touch /data-encrypted/test.txt && \
      echo "Hello World!" > /data-encrypted/test.txt'

    kubectl rollout restart -n default deployment/test-deployment

    kubectl rollout status -n default --watch deployment/test-deployment

    kubectl exec -n default deployment/test-deployment -- \
      /bin/cat /data-encrypted/test.txt

This sequence will…

- Create a file test.txt in our mounted encrypted volume inside the Pod and write Hello World! to it.

- Restart the Deployment, which replaces the Pod with a new one, so that we can make sure the data has in fact been persisted.

- Wait for the Deployment to come back up again after the restart.

- Read the contents of the file we created before the restart.

And from the looks of it, it worked! We can in fact utilize encrypted storage on Kubernetes with OpenStack’s LUKS-encrypted volumes, saving us the hassle of putting encryption in place ourselves.

Our data persists across workload restarts thanks to the PVC, which in turn utilizes encrypted storage at rest for improved security.

Towards Higher Security!

While encrypted storage on Kubernetes, backed by OpenStack’s encrypted volume feature, only provides encryption at rest, it’s still another (or a first) step towards secure workloads. The best thing is, we don’t have to do anything manually: just create a PVC referencing an encrypted StorageClass, and OpenStack will do all the heavy lifting to provide you with encrypted storage on Kubernetes. If you have more questions regarding the technical details of this process, don’t hesitate to get in touch with our MyEngineers!

Of course, there’s more to cloud-native security than just encrypted data. That’s why you should make sure to subscribe to our newsletter and keep an eye out for content on service meshes, policy engines, and other security measures we’d love to explore with you in the future.

by Daniel Bodky | Jul 16, 2024 | Kubernetes

Imagine your company embraced a microservice architecture. As you develop and deploy more and more microservices, you decide to orchestrate them using Kubernetes. You start writing YAML manifests and additional configuration, and deploy necessary tooling to your cluster. At some point, you switch over to Kustomize or Helm, for the sake of configurability.

Maybe you end up using ArgoCD or Flux for a GitOps approach. At some point, Kustomize and Helm don’t cut it anymore – too much YAML, too little overview of what you’re actually doing. You find yourself researching and comparing Kubernetes deployment tools.

Whether you had to imagine this scenario, lived through it, or are living through it right now – it’s not a nice place to be. Helm and Kustomize are reliable, well-established tools, well integrated with the Kubernetes ecosystem. Yet they lack ergonomics and don’t provide a great developer experience – it’s time for a successor!

Because you and I aren’t the only ones feeling that way, a handful of tools exist today, each trying to solve different pain points of application delivery on Kubernetes. In this post, I will compare three of these Kubernetes deployment tools for you.

Enter the stage – Timoni, kapp, and Glasskube.

Timoni – Distribution and Lifecycle Management for Cloud-Native Applications

Timoni promises to bring CUE’s type safety, code generation, and data validation features to Kubernetes, making the experience of crafting complex deployments a pleasant journey – which sure sounds nice! But what’s CUE?

CUE is an open-source data validation language with its roots in logic programming. As such, it’s conveniently located at the intersection of describing state, inferring state, and validating state – sounds like a perfect fit for a deployment tool, but how does it work?

Timoni builds on top of four concepts – Modules, Instances, Bundles, and Artifacts, each with their own implications and related actions from the Timoni CLI:

- Timoni Modules are a collection of CUE definitions and constraints that make up a CUE module of an opinionated structure.

These modules accept a set of defined input values from a user and generate a set of Kubernetes manifests as output that gets deployed to your Kubernetes cluster(s) by Timoni.

Timoni Modules can be thought of as equivalents to e.g. Helm Charts.

- Timoni Instances are existing installations of Timoni Modules within your cluster(s). With Timoni, the same Module can be installed to a cluster multiple times, with each Instance having its own set of provided values.

Timoni Instances can be thought of as equivalents to Helm Releases.

- Timoni Bundles allow authors to ship and deploy both Module references and Instance values within the same artifact. This way, applications can be shipped along with their required infrastructure.

Timoni Bundles can be thought of as equivalents to Umbrella Charts in Helm.

- Timoni Artifacts are the intended way of distributing Modules and Bundles. Timoni comes with its own OCI media types and adds metadata derived from Git to enable reproducible builds.

This way, Modules and Bundles can be signed and compared against each other, and securely distributed.

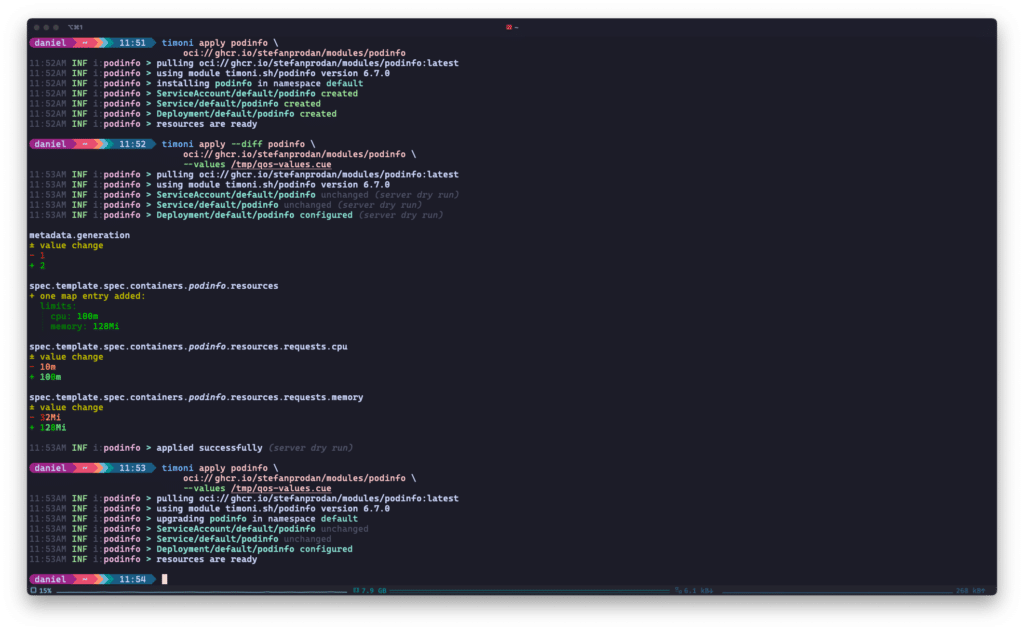

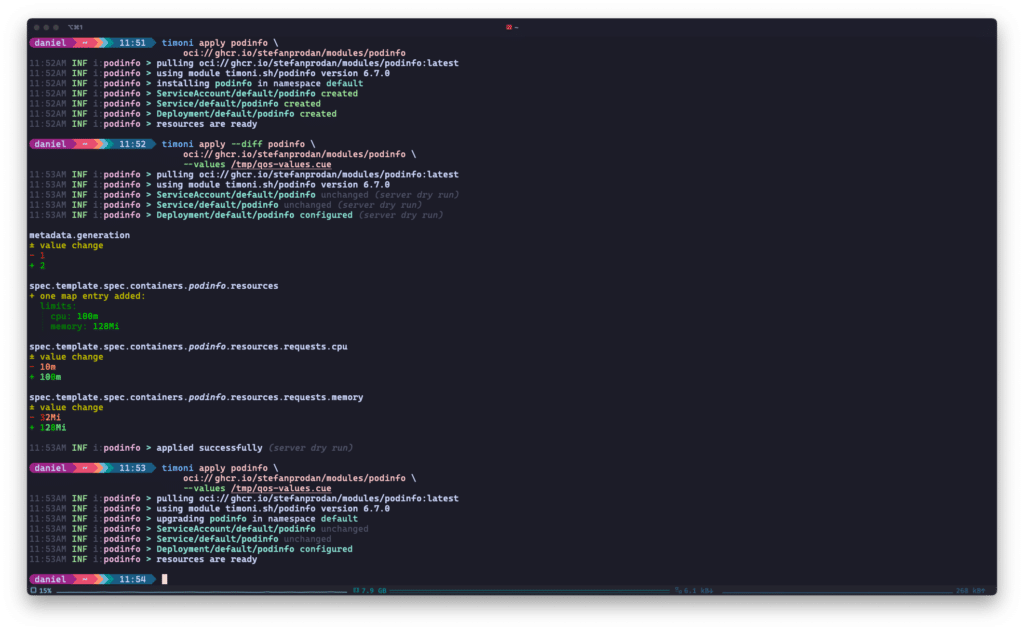

Timoni will list changes to your Kubernetes deployments upon upgrade and only patch resources where needed. The example is taken from Timoni’s Quickstart.

The Takeaway

When comparing Timoni to existing Kubernetes deployment tools such as Helm or Kustomize, a few advantages can be pointed out:

- Speed: Timoni utilizes Kubernetes’ server-side apply in combination with Flux’s drift detection and only patches those manifests that changed in between upgrades.

- Verbosity: Timoni can tell you exactly what it’s going to change, down to the Kubernetes resource and its properties.

- Validation: Due to Modules being a collection of CUE definitions and constraints, Timoni can enforce correct data types or even values (think enums) for Instance deployments.

- Security and Distribution: While Helm charts have been distributable as OCI artifacts since Helm 3.8, they still lack reproducibility in some parts. Kustomize doesn’t offer any distribution mechanism whatsoever.

Timoni thought about these things from the start and offers a streamlined, supported, and secured way of distribution.

Overall, Timoni may be a great choice for you if you’re looking for speed, security, and correctness in your Kubernetes deployment tools. Timoni can deploy large and complex applications consisting of many manifests while surfacing configuration constraints to operators using CUE.

On the downside, CUE has a learning curve of its own, and Timoni is not ‘GitOps-ready’ in a tool-agnostic way: while there is a documented way to use Timoni with Flux, there’s no equivalent for e.g. ArgoCD. This will probably change once Timoni’s API matures.

Let’s take a look at kapp next and see how it tackles application deployments to Kubernetes!

kapp – Take Control of Your Kubernetes Resources

kapp is part of Carvel, a set of reliable, single-purpose, composable tools that aid in building, configuring, and deploying applications to Kubernetes. According to the website, it is lightweight, explicit, and dependency-aware. Let’s dig into this:

- lightweight: kapp is a client-side CLI that doesn’t rely on server-side components, thus enabling it to work in e.g. RBAC-constrained environments.

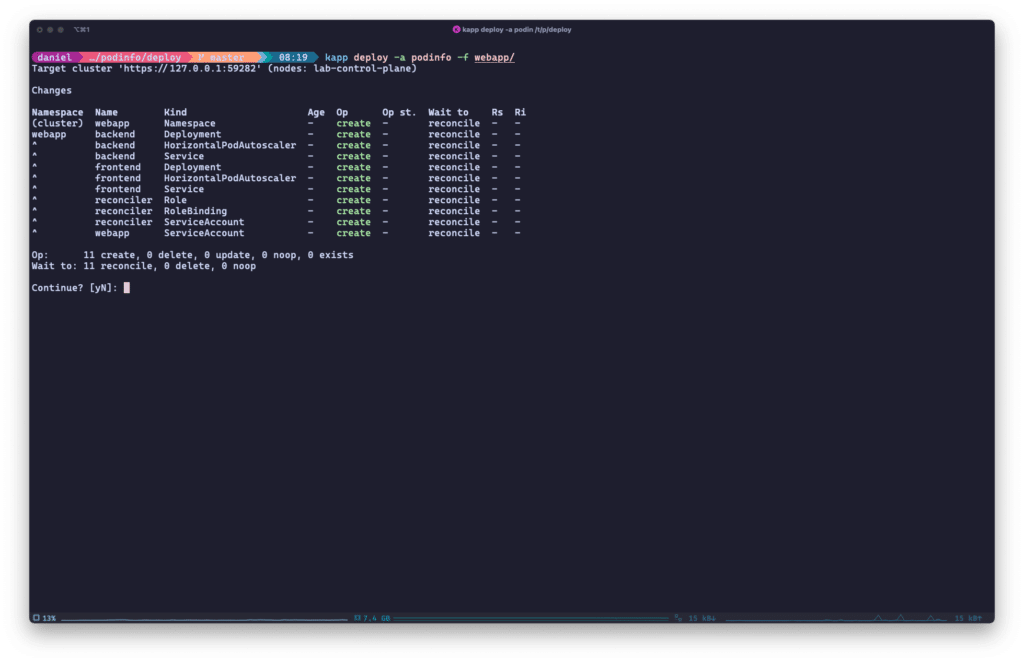

- explicit: kapp will calculate creations, deletions, and updates of resources up front and report them to the user. It’s up to them to confirm those actions.

kapp will also report the reconciliation process throughout the application deployment.

- dependency-aware: kapp orders resources it is supposed to apply. That means that e.g. namespaces or CRDs will be applied before other resources possibly depending on them.

It is possible to add custom dependencies, e.g. to run housekeeping jobs before or after an application upgrade.
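As an illustration of such a custom dependency, here is a minimal sketch that uses kapp’s change-group and change-rule annotations so that a database migration Job is applied before the application’s Deployment. The group names, resource names, and container images are hypothetical.

```yaml
# Sketch: apply a (hypothetical) migration Job before the app Deployment.
# kapp reads the change-group / change-rule annotations to order its changes.
apiVersion: batch/v1
kind: Job
metadata:
  name: db-migrations
  annotations:
    kapp.k14s.io/change-group: "example.com/db-migrations"
spec:
  template:
    spec:
      restartPolicy: Never
      containers:
        - name: migrate
          image: example.com/my-app-migrations:1.0.0 # hypothetical image
---
apiVersion: apps/v1
kind: Deployment
metadata:
  name: my-app
  annotations:
    kapp.k14s.io/change-group: "example.com/app"
    # Create/update this Deployment only after the migration group was applied
    kapp.k14s.io/change-rule: "upsert after upserting example.com/db-migrations"
spec:
  selector:
    matchLabels:
      app: my-app
  template:
    metadata:
      labels:
        app: my-app
    spec:
      containers:
        - name: app
          image: example.com/my-app:1.0.0 # hypothetical image
```

Since a Job’s Pod template is immutable, re-running the migration on later deploys needs additional kapp configuration, which this sketch leaves out.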

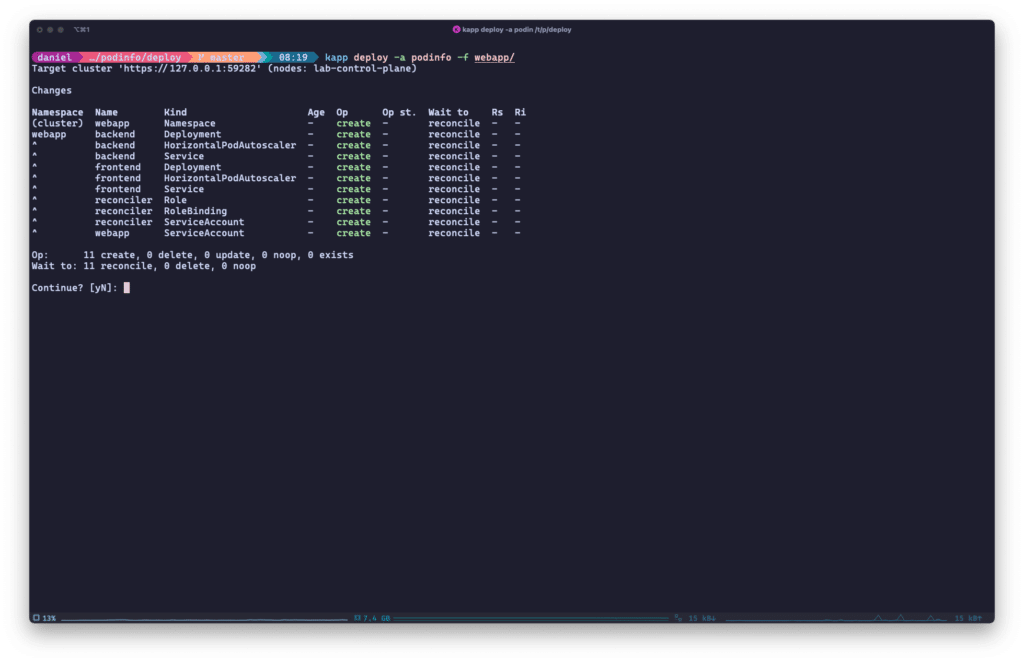

Similar to Timoni, kapp is very verbose about what it’s going to do on your behalf, and guides you through the process of deploying your application(s):

kapp will list all pending actions for the user to confirm before a deployment.

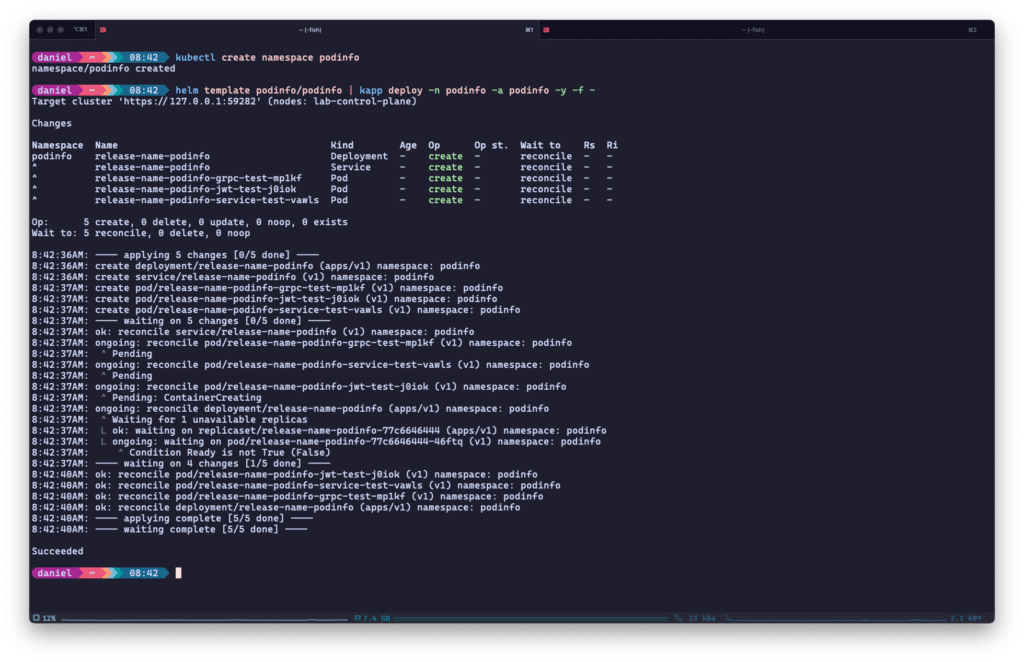

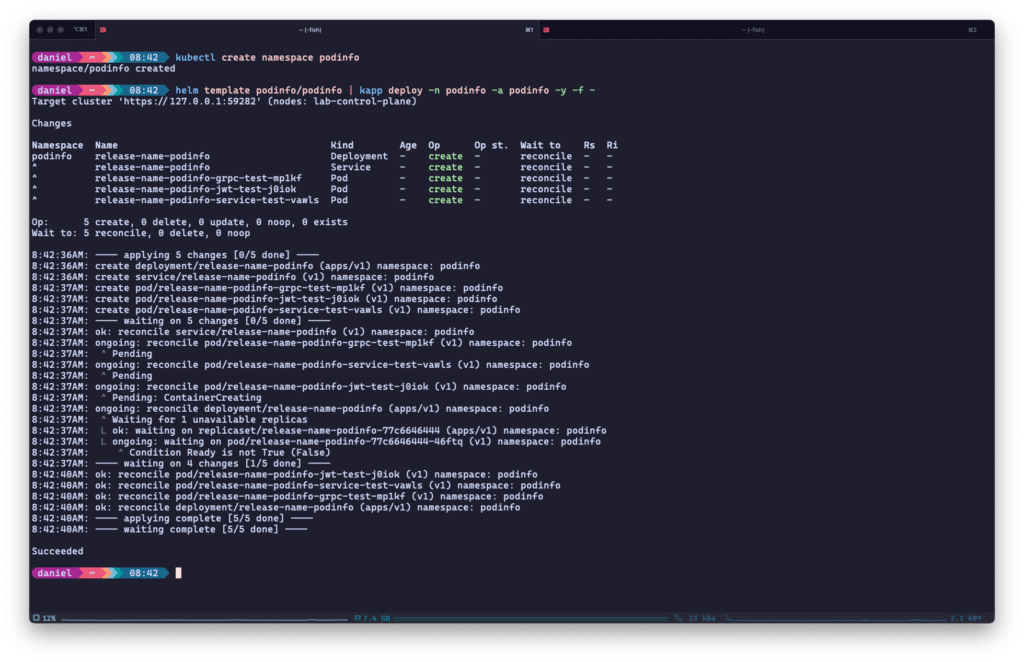

Unlike Timoni, you don’t necessarily have to write bespoke application configuration yourself – kapp can build on top of existing solutions like Kustomize, Helm, or ytt, another Carvel tool for templating and patching YAML. See the example below with the podinfo Helm chart:

kapp can digest and act on the manifests produced by many other Kubernetes deployment tools, such as Helm.

Once deployed, kapp can provide insights about your Kubernetes deployments: from reconciliation state to linked ServiceAccounts or ConfigMaps to the identifying labels of your applications – kapp has you covered.

If you’re more concerned about Day-2 operations and automation, kapp has another ace up its sleeve: kapp-controller. Combine those two Carvel tools and you get a GitOps-ready, fully automated solution for Kubernetes deployments.

kapp-controller introduces a set of CRDs to manage Apps and/or distribute them as Packages, PackageRepositories, and PackageInstalls. Those CRDs in turn can be deployed e.g. by your GitOps solution.
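For a rough idea of what such a resource looks like, here is a minimal sketch of a kapp-controller App that fetches plain manifests from a Git repository, passes them through ytt, and deploys them with kapp. The repository URL, path, and ServiceAccount name are hypothetical, and the ServiceAccount needs RBAC permissions for whatever the App deploys.

```yaml
# Sketch of a kapp-controller App; URL, path, and ServiceAccount are hypothetical.
apiVersion: kappctrl.k14s.io/v1alpha1
kind: App
metadata:
  name: my-app
  namespace: default
spec:
  # kapp-controller deploys with the permissions of this ServiceAccount
  serviceAccountName: my-app-deployer
  fetch:
    # Pull the configuration from a Git repository
    - git:
        url: https://github.com/example/my-app-config
        ref: origin/main
        subPath: config
  template:
    # Run the fetched files through ytt (plain YAML passes through unchanged)
    - ytt: {}
  deploy:
    # Apply the result with kapp, including its ordering and change tracking
    - kapp: {}
```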

The Takeaway

This set of features makes kapp a solid choice if you’re looking for one or more of the following strengths when comparing Kubernetes deployment tools:

- correctness: kapp will list all pending mutations to your application deployments, be it creation, deletion, or patch, and ask for confirmation by default.

kapp will also keep you updated throughout the reconciliation process and display the state of your deployments at any time.

- ease of adoption: with kapp, there is no need to rewrite existing deployment configurations, be it manifests, Helm charts, or Kustomize structures.

Just add kapp to your deployment pipeline and capitalize on the value it adds.

- GitOps-ready: using kapp-controller combined with kapp, you can utilize declarative CRDs to manage your application deployment.

In addition, you gain the ability to manage different versions of deployments as Packages.

We already looked at two Kubernetes deployment tools so far, but you know what they say: “Third time’s the charm!”

So let’s look at the last tool for today – Glasskube.

Glasskube – the Next Generation Package Manager for Kubernetes

Reading “The next generation package manager for Kubernetes” certainly raises expectations – but Glasskube’s steep rise to ~2400 GitHub stars and the backing startup’s recent acceptance into Y Combinator can be read as signs that Glasskube might indeed be the next (or first?) package manager for Kubernetes.

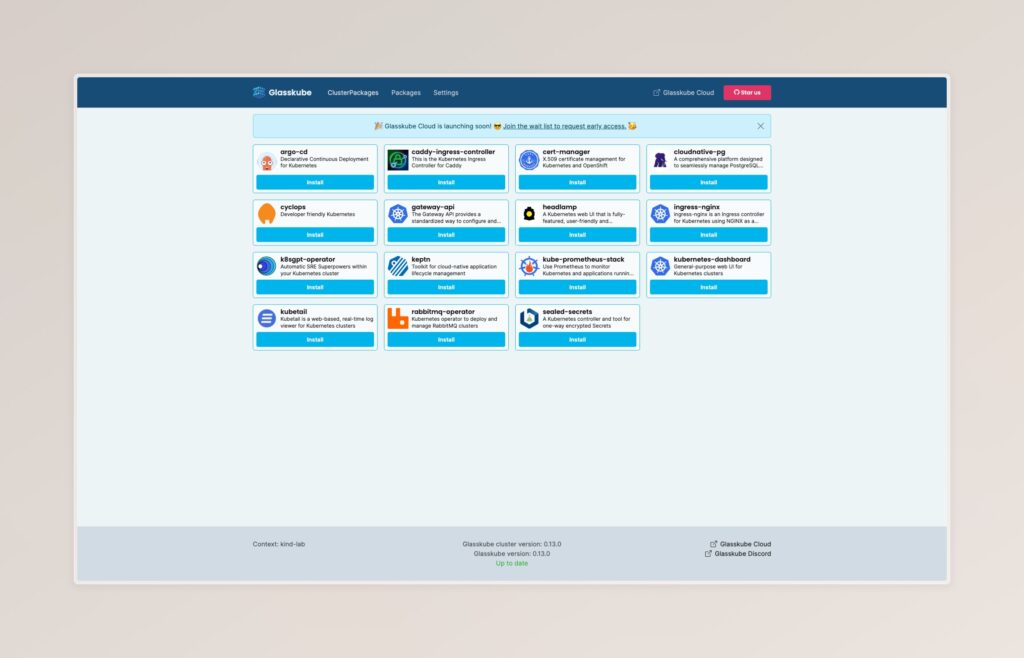

Glasskube is fully open-source (like Timoni and kapp), comes with a built-in UI, and promises to be both enterprise-ready and GitOps-compatible. The project also hosts a public registry of tested and verified packages for everyone to rate and use within their clusters. But let’s get to the technical details, shall we?

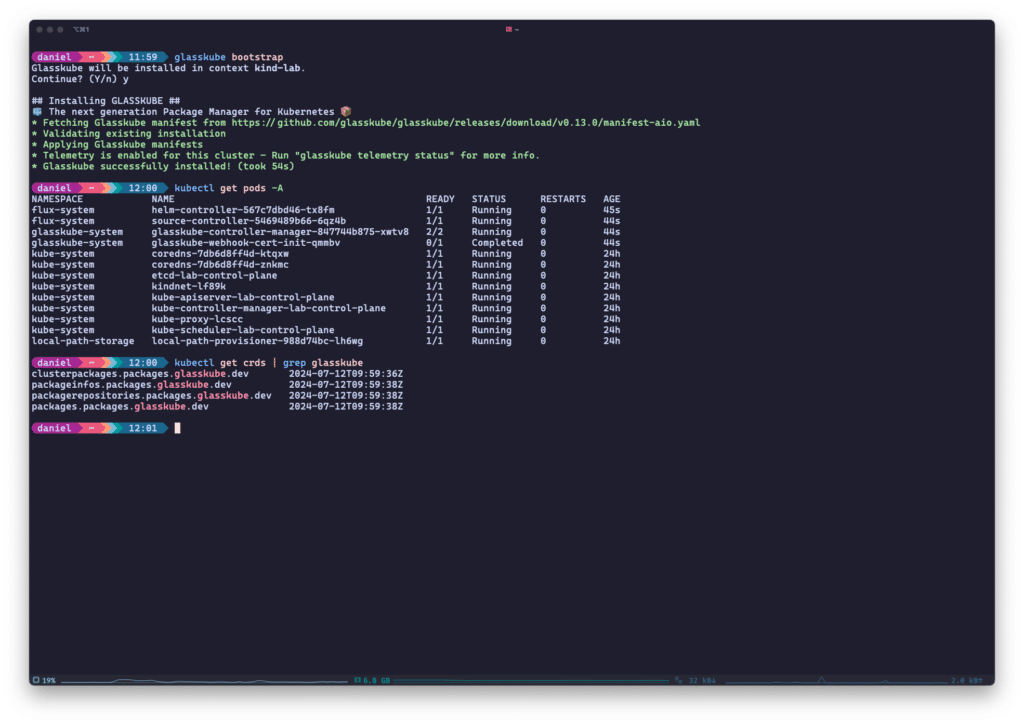

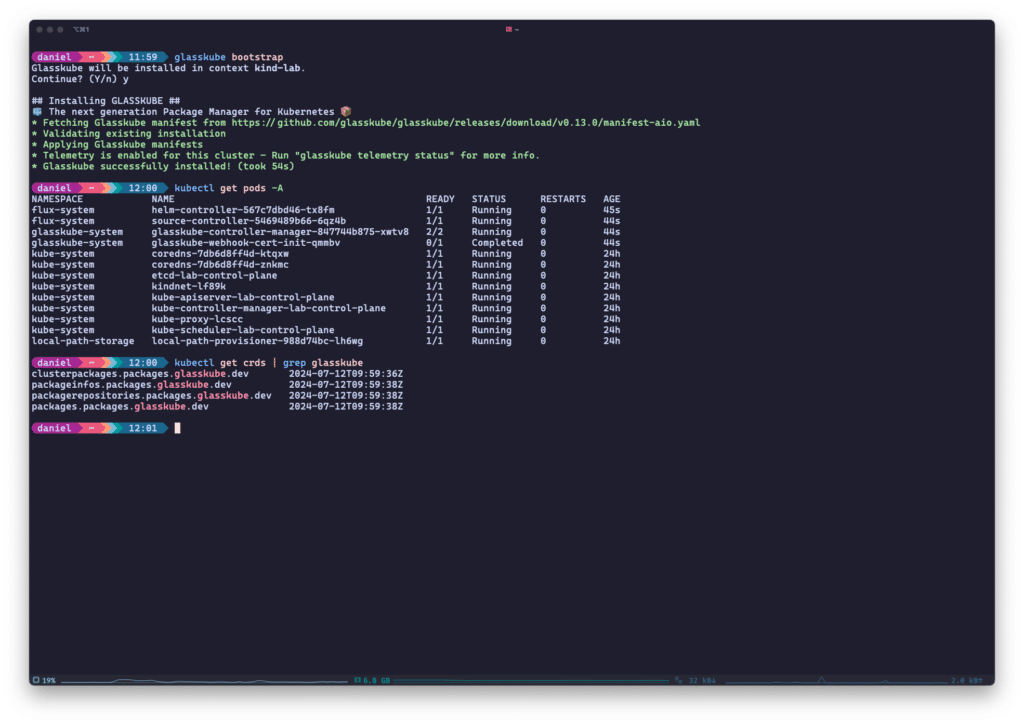

At the core of Glasskube is its CLI, which is also needed to bootstrap the in-cluster components of Glasskube: its own controller manager running two controllers, as well as Flux’s Helm and Source controllers. If you are already running Flux within your cluster, you can skip the installation of the Flux components altogether.

In addition to these controllers, Glasskube will also install a few CRDs in your cluster which manage packages, repositories, and package installations for you.

Glasskube installs a few controllers and CRDs in your cluster when bootstrapped from the CLI.

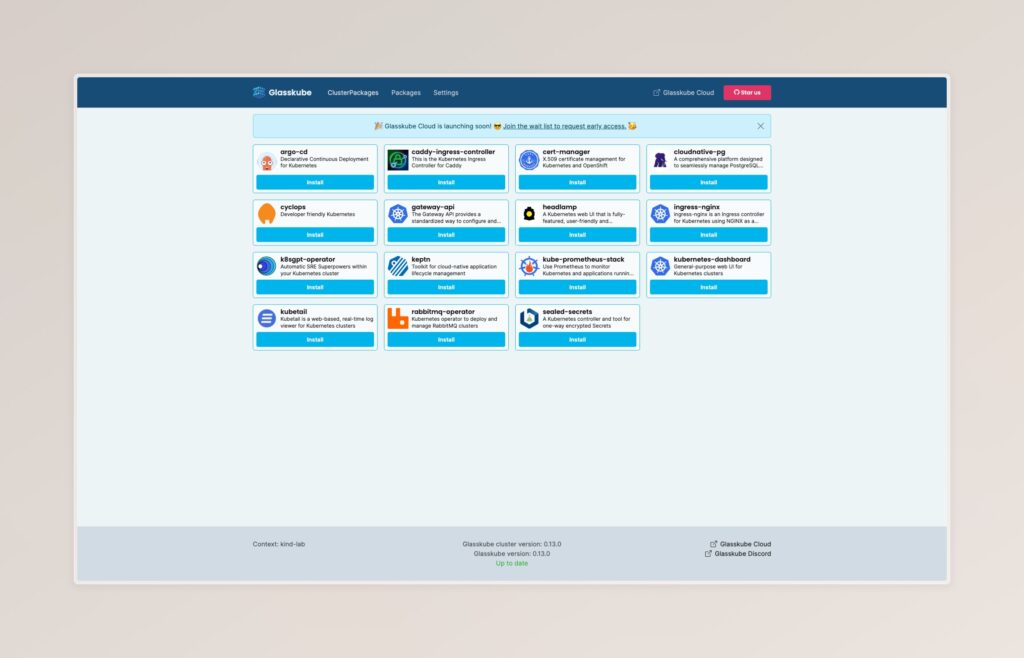

The UI on the other hand doesn’t run within the cluster; it gets spun up locally using the CLI and provides a very straightforward way of installing packages to your cluster.

Glasskube differentiates between ClusterPackages to be installed in a cluster-wide context (e.g. operators), and Packages to be installed in namespaced contexts. Within your cluster, those package installations get persisted as CustomResources. The same is true for registries which you can configure via CLI, including authentication.

Glasskube’s web UI comes with the official registry pre-configured and many (cluster-)packages ready to install.

Just like kapp, Glasskube builds on top of existing solutions – Helm or plain YAML manifests – to deploy packages to Kubernetes. A Glasskube Package defines configurable values, including descriptions, constraints, etc., to be shown to the user in the UI or terminal upon installation. These value definitions support both value literals and lookups sourced from other cluster resources.

Glasskube will receive and validate those values before patching them into the underlying Helm values or YAML manifests.

The Takeaway

With this information, we can again identify a few key points of Glasskube when comparing different Kubernetes deployment tools:

- ease of use: With its automated bootstrap procedure, its client-side UI, and more and more packages available out of the box, Glasskube is perfect for building PoCs and ‘trying things out’.

- dependency management: As a package manager, Glasskube promises fully-fledged dependency management.

This is already a reality, e.g. installing the Keptn Package will also install the cert-manager Package as a dependency.

- GitOps-ready: as with kapp, Glasskube’s CRDs make it possible to deploy applications declaratively.

As the project matures, I’d love to see the UI gain more features aimed at multi-tenancy and user governance. Even if that doesn’t happen, it will be possible to build bespoke UIs on top of the underlying CRDs, which capture configuration and deployment state.

Quo Vadis, Kubernetes Deployments?

Helm and Kustomize are standardized pillars of the Kubernetes ecosystem. However, a new generation of deployment tools stands on the shoulders of those giants in one way or another and tries to improve things.

After comparing these three Kubernetes deployment tools, it becomes clear to me that correctness, distribution, and automation are key areas of interest for Timoni, kapp, and Glasskube:

- All three solutions have their own ways of validating user input and/or making deployment processes easier to comprehend.

- All three solutions work on better ways of distributing and consuming applications.

Timoni with its Modules and Bundles, kapp (or rather kapp-controller) with its Package CRD, and Glasskube with its (Cluster-)Package CRDs.

- All three solutions highlight their abilities to integrate with GitOps practices in their documentation.

Automation and declarative configuration of infrastructure and application deployments have become a de-facto standard in Kubernetes, and the new generation of Kubernetes deployment tools knows this.

kapp and Glasskube offer very clear adoption paths when coming from Helm charts or YAML manifests – just build on top of your existing solutions, and extend or refactor them over time. Timoni, on the other hand, comes with the initial cost and learning curve of CUE, in exchange for the promise of very high performance and probably the best input validation of the three tools compared.

No matter which road you will eventually go down, I hope this article sheds some light on the options available out there today. Make sure to check out some of our other blog posts around Kubernetes, and subscribe to our newsletter to stay in the loop from now on!

by Daniel Bodky | Jun 14, 2024 | Kubernetes

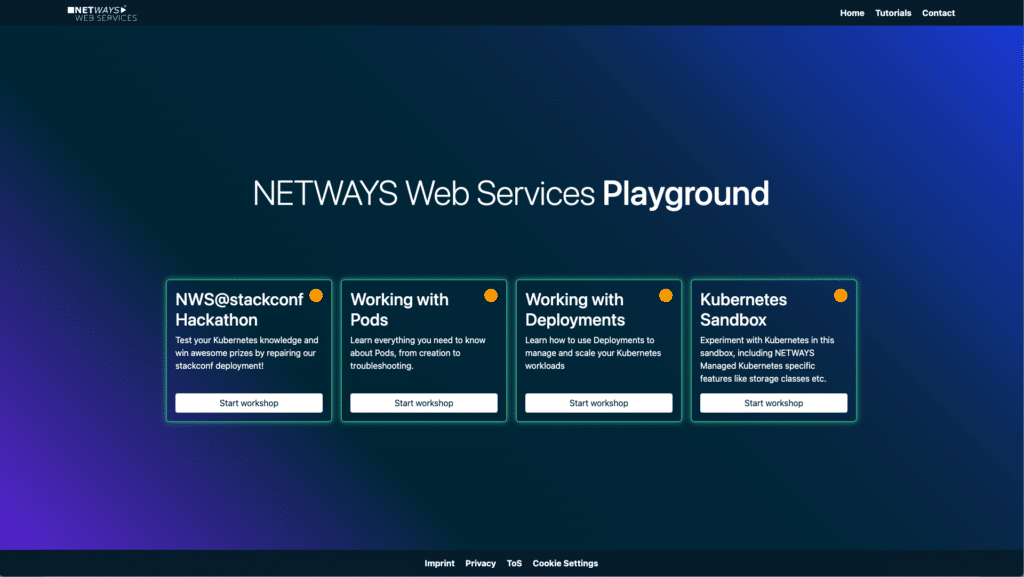

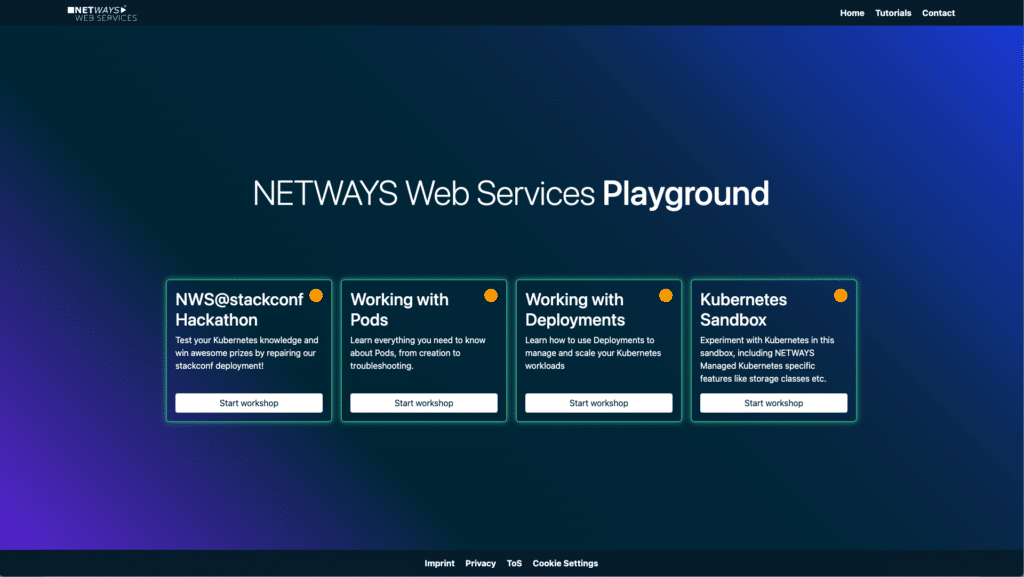

We are thrilled to introduce our brand-new NWS Kubernetes Playground! This interactive platform is designed to help you master cloud-native technologies, build confidence with tools like Kubernetes, and explore a range of NWS offerings – all for free!

What is the NWS Kubernetes Playground?

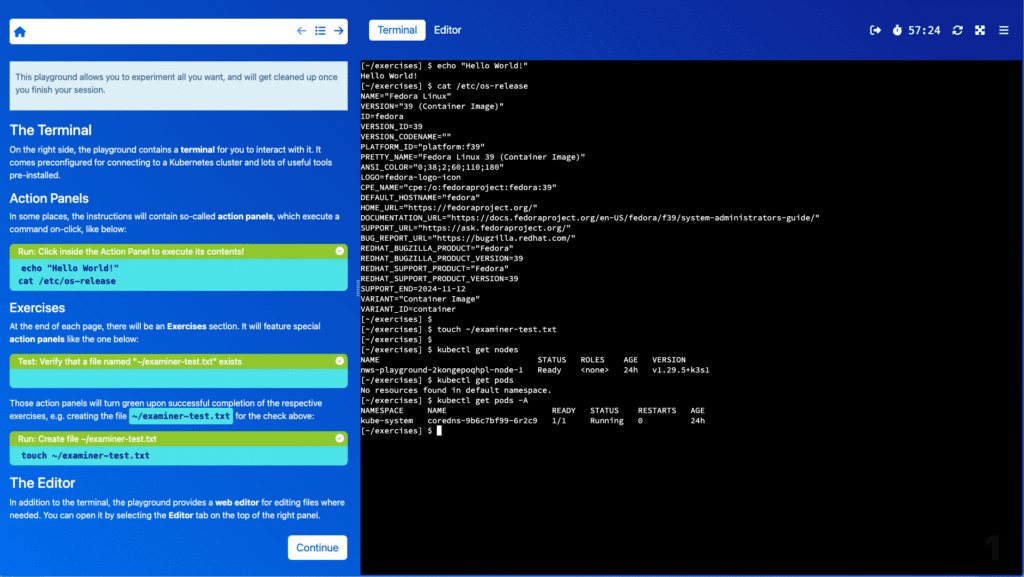

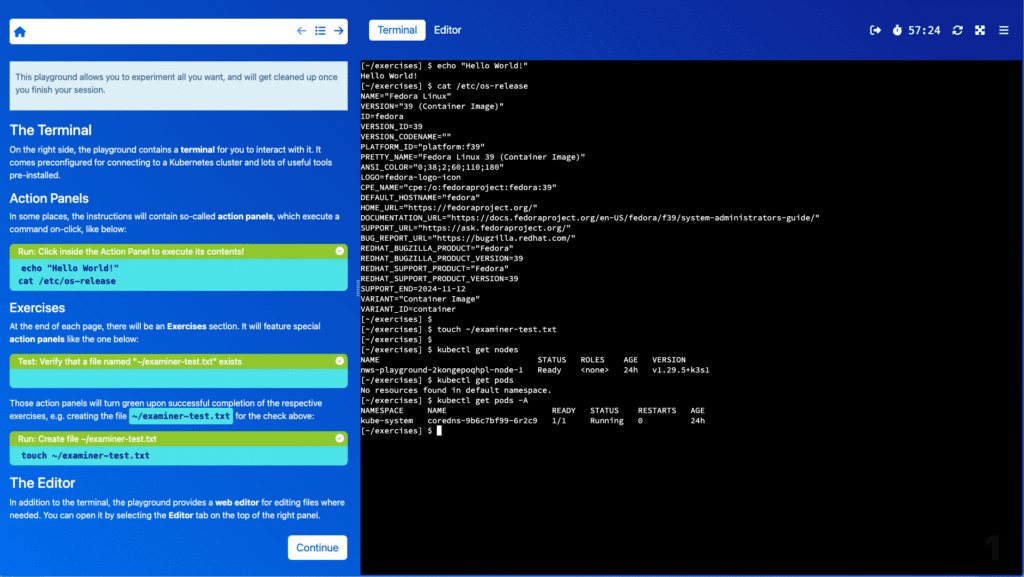

The Kubernetes Playground offers a unique, hands-on learning experience within a fully-fledged, free environment powered by NWS Kubernetes. Each playground comes with workshop-like instructions that guide you through small, bite-sized topics, perfect for learning in short bursts – whenever you’ve got a few minutes. Most sections include interactive tasks and quizzes to test your knowledge and reinforce what you’ve learned.

Hone your cloud-native skills with bite-sized labs around Kubernetes.

What’s Available

At launch, we are excited to offer three initial playgrounds that focus on the fundamentals of Kubernetes:

- Working with Pods:

  - Learn everything you need to know about Pods, from creation to troubleshooting.

  - Gain hands-on experience and a deep understanding of how Pods function within a Kubernetes environment.

- Working with Deployments:

  - Discover how to use Deployments to manage and scale your Kubernetes workloads.

  - Master essential concepts and practices to effectively deploy and maintain your applications.

- Kubernetes Sandbox:

  - Experiment freely with Kubernetes in this sandbox environment.

  - Explore NWS Managed Kubernetes-specific features like storage classes.

  - Enjoy a comprehensive space to practice without restrictions.

Getting Started

To dive into the world of Kubernetes, visit our playground and start your journey. Whether you’re following along with the written tutorials from our blog or exploring the Kubernetes Sandbox on your own, our playgrounds offer a practical and immersive learning experience.

Interactive sessions provide information, knowledge checks, and interactive elements to improve your cloud-native skills

The Next Steps

Stay tuned for more! We are continuously developing new playgrounds and will announce new additions in our newsletter. Subscribe to stay up-to-date with the latest offerings and enhancements in our Kubernetes Playground.

With the NWS Kubernetes Playground, you can build a solid foundation in Kubernetes, advance your skills, and confidently navigate the complexities of cloud-native technologies.

For further details and updates, visit our blog and subscribe to our newsletter!

by Daniel Bodky | Apr 18, 2024 | Software as a Service

Last Thursday we released the newest addition to our set of managed services – Prometheus. As a leading open-source monitoring solution, it has exciting features for you in store.

Let’s have a look at what Prometheus is, how it can help you monitor your IT infrastructure, and how you can start it on MyNWS.

What is Prometheus

Prometheus is the leading open-source monitoring solution, allowing you to collect, aggregate, store, and query metrics from a wide range of IT systems.

It comes with its own query language, PromQL, which allows you to visualize and alert on the behavior of your systems.
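To illustrate the alerting side, here is a minimal sketch of a rule file in Prometheus’ standard format. The PromQL expression up == 0 matches every target whose most recent scrape failed; the group and alert names are hypothetical, and how rules are loaded into Managed Prometheus on MyNWS isn’t covered here.

```yaml
# Sketch of a Prometheus alerting rule file; names are hypothetical.
groups:
  - name: example-alerts
    rules:
      - alert: TargetDown
        # "up" is 1 for a successful scrape and 0 for a failed one
        expr: up == 0
        # Only fire once the condition has held for five minutes
        for: 5m
        labels:
          severity: critical
        annotations:
          summary: "Target {{ $labels.instance }} of job {{ $labels.job }} is down"
```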

Being a pillar of the cloud-native ecosystem, it integrates very well with solutions like Kubernetes, Docker, and microservices in general. In addition, you can monitor services such as databases or message queues.

With our managed Prometheus offering, you can leverage its versatility within minutes, without the hassle of setting up, configuring, and maintaining your own environment.

If this is all you need to know and you want to get started right away – the first month is free!

How Does Prometheus Work

Prometheus scrapes data in a machine-readable format from web endpoints, conventionally on the /metrics path of an application.

If a service or system does not provide such an endpoint, there is probably a metrics exporter that collects the data locally in some other way and exposes it via its own endpoint.
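In a self-managed setup, this pull model is configured per scrape job. The following sketch uses Prometheus’ standard configuration format with hypothetical hostnames: one job scrapes an application on the conventional /metrics path, and one scrapes a node_exporter, a common exporter for host metrics, on its default port 9100. How Managed Prometheus on MyNWS exposes this configuration may differ.

```yaml
# Sketch of a prometheus.yml scrape configuration; hostnames are hypothetical.
global:
  scrape_interval: 30s
scrape_configs:
  # An application that exposes its own metrics
  - job_name: my-app
    metrics_path: /metrics # the default, spelled out for clarity
    static_configs:
      - targets: ["my-app.example.internal:8080"]
  # An exporter that collects host metrics and exposes them on port 9100
  - job_name: node
    static_configs:
      - targets: ["my-host.example.internal:9100"]
```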

The collected metrics get stored by Prometheus itself, aggregated over time, and eventually deleted. With PromQL, you can make sense of your metrics visually, either in Prometheus’ web interface or in Grafana.

How to Use Managed Prometheus on MyNWS

Spinning up Managed Prometheus on MyNWS is as easy as selecting the product from the list, picking a name for your app, choosing a plan, and clicking on Create. Within minutes, your Managed Prometheus app will be up and running – so what does it have in store?

Managed Prometheus on MyNWS hits the ground running – below are just the key features it has to offer:

- Web access to Prometheus and Grafana – for quick data exploration and in-depth insights

- User access management based on MyNWS ID – onboard your team

- Prebuilt dashboards and alarms for Kubernetes – visualize insights immediately

- Bring your own domain – and configure it easily via the MyNWS dashboard

- Remote Write capabilities – aggregate data from multiple Prometheus instances in MyNWS
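On the sending side, remote write is configured in each Prometheus instance that should forward its data. Below is a minimal sketch in Prometheus’ standard configuration format; the URL and credentials are hypothetical placeholders, since the endpoint and authentication used by Managed Prometheus on MyNWS aren’t described in this post. The /api/v1/write path is the one Prometheus serves when its own remote write receiver is enabled.

```yaml
# Sketch of a remote_write section; URL and credentials are hypothetical.
remote_write:
  - url: https://prometheus.example.com/api/v1/write
    basic_auth:
      username: my-user
      # Read the password from a file instead of embedding it in the config
      password_file: /etc/prometheus/remote-write-password
```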

If this sounds good, make sure to give Managed Prometheus a try on MyNWS – the first month is free!

If you have further questions or need assistance along the way, contact our MyEngineers or have a look at our open-source trainings in case you are just starting out with Prometheus.

by Daniel Bodky | Mar 26, 2024 | Events

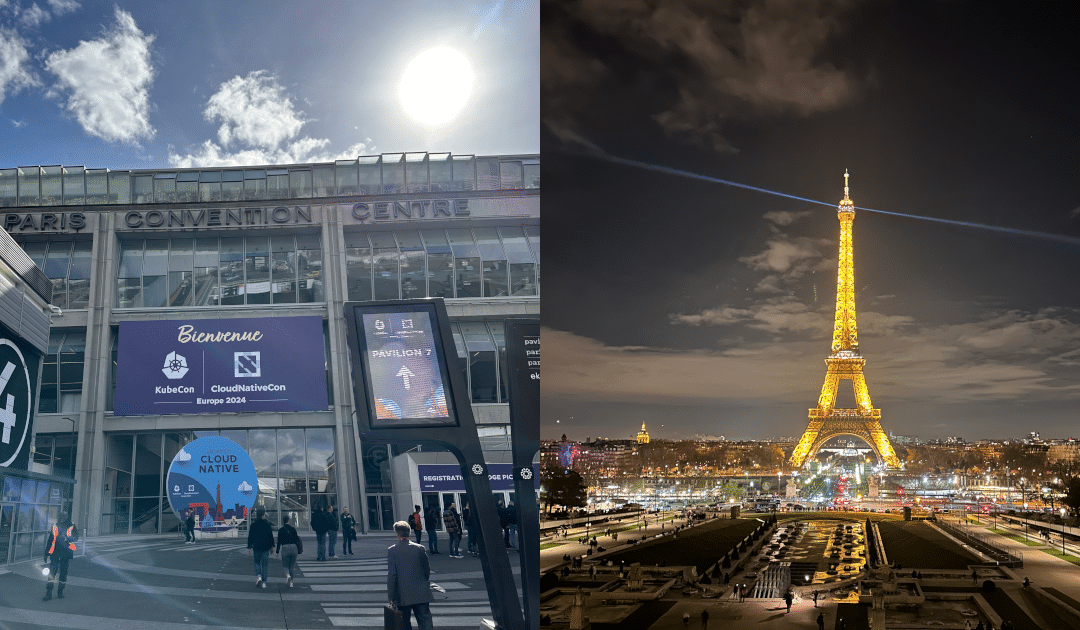

Last week, our team was at KubeCon 2024 to learn about the latest and greatest in the cloud-native ecosystem. Back in our cozy (home-)offices, we want to share our insights with you! Buckle up, bring your office chairs into an upright position, and let’s start with Rejekts EU before getting to our KubeCon 2024 review.

Rejekts – The Pre-Conference Conference

I made my way to Paris a few days earlier than the rest of the team in order to make it to Cloud-Native Rejekts. Over the past years, the conference has hosted many great talks that were rejected by KubeCon, whose acceptance rate was below 10% for this year’s European edition.

With space for ~300 attendees and 45 talks on 2 tracks, ranging from sponsored keynotes and regular talks to lightning talks on the second day of the conference, Rejekts set the scene for a fantastic cloud-native conference week.

Cloud-Native Rejekts took place at ESpot, a flashy eSports venue.

Many attendees agreed that the less crowded venue combined with high-quality talks made for a very insightful conference – kind of like a Mini-KubeCon. In addition, folks representing projects and companies from pretty much all cloud-native areas of interest were present.

This led to engaging conversations on the hallway track, revolving around community contributions, new technologies, and marketing strategies.

My Personal Favorites

Based on the event schedule, I decided to focus on talks about Cilium, Kubernetes’ rather new Gateway API, and GitOps. I wasn’t disappointed – there were a few gems among the overall great talks:

In Demystifying CNI – Writing a CNI from scratch, Filip Nikolic from Isovalent, the creators of Cilium, took the audience on a journey to create their own rudimentary CNI – in Bash. From receiving JSON from the container runtime to creating the needed veth pairs to providing IP addresses to Pods, it was a concise walkthrough of a real-world scenario.

Nick Young, one of the maintainers of the Kubernetes Gateway API, showed us how not to implement CRDs for Kubernetes controllers in his great talk Don’t do what Charlie Don’t Does – Avoiding Common CRD Design Errors, following Charlie Don’t through the process of creating an admittedly horrible CRD specification.

The third talk I want to highlight was From Fragile to Resilient: ValidatingAdmissionPolicies Strengthen Kubernetes by Marcus Noble, who gave a thorough introduction to this rather new type of policy and even looked ahead at MutatingAdmissionPolicies, which are currently proposed in KEP 3962.

After a bunch of engaging lightning talks at the end of Day 2, I was ready to meet up with the rest of the team and prepare for the main event – so let’s dive into our KubeCon 2024 review!

KubeCon 2024 – AI, Cilium, and Platforms

KubeCon Paris was huge – the biggest in Europe yet. The organizers had already announced this in their closing keynote at KubeCon 2023 in Amsterdam, but still: seeing 12,000 people gathered in a single space is something else.

Justin, Sebastian, and Achim waiting for KubeCon to start

All of them were awaiting the Day 1 keynotes, which often reflect the tone of the whole conference. This year, the overarching theme was obvious: AI. Every keynote at least mentioned AI, and most of them stated: We’re living in the age of AI.

Interestingly enough, many attendees left the keynotes early, maybe annoyed by the constant stream of news regarding AI we’ve all witnessed over the last year. Maybe also due to the sponsor booths – many of them showcased interesting solutions or at least awesome swag!

What the Team was up to

Maybe they also went off to grab a coffee before attending the first talks of Day 1, of which there were many. Thus, instead of giving you my personal favorites, I asked Justin, Sebastian, and Achim to share their conference highlights and general impressions. Read their KubeCon 2024 review below:

Justin (Systems Engineer):

“I liked the panel discussion about Revolutionizing the Control Plane Management: Introducing Kubernetes Hosted Control Planes best.

As we’re constantly looking for ways to improve our managed Kubernetes offering, we also thought about hiding the control plane from the client’s perspective, so this session was very insightful.

Overall, my first KubeCon has been as I expected it to be – you could really feel how everyone was very interested in and excited about Kubernetes.”

Sebastian (CEO):

“My favorite talk was eBPF’s Abilities and Limitations: The Truth. Liz Rice and John Fastabend are great speakers who explained a very difficult and technical topic with great examples.

I’m generally more interested in core technologies than shiny new tools that might make my life easier – where’s the fun in that? So eBPF looks very interesting.

Overall, this year’s KubeCon appeared to be better organized than the one in Valencia, in the aftermath of the pandemic. Anyways, no location will beat Valencia’s beach and climate!”

Daniel (Platform Advocate):

“Part of my job is to see how end users consume and build upon Kubernetes. So naturally, I’ve been very excited about the wide range of talks about Platform Engineering going into KubeCon.

My favorite talk was the report on the State of Platform Maturity in the Norwegian Public Sector by Hans Kristian Flaatten.

I experienced the digitalized platforms of the Norwegian Public Sector back in university as a temporary immigrant, and adoption has seemingly improved tremendously since.

As a German citizen, it seems unbelievable for the public sector to adopt cloud-native technologies at this scale.

While I share Sebastian’s views regarding Valencia (which was my first KubeCon), I liked a lot of things about Paris: the city, the venue, and the increase in attendees and talks to choose from.”

Achim (Senior Manager Cloud):

“I really liked From CNI Zero to CNI Hero: A Kubernetes Networking Tutorial Using CNI – the speakers Doug Smith and Tomofumi Hayashi explained pretty much everything there is to know about CNIs.

From building and configuring CNI plugins to their operation at runtime, they covered many important aspects of the CNI project.

Regarding KubeCon in general, I feel like Sebastian – Paris was well organized, apart from that there wasn’t too much change compared to the last years. Many sponsors were familiar, and the overall topics of KubeCon remained the same, too.”

KubeCon 2024 Reviewed

Looking back, we had a blast of a week. Seeing old and new faces of the cloud-native landscape, engaging in great discussions about our favorite technologies, and learning about new, emerging projects are just a few reasons why we love attending KubeCon.

We now have one year to implement all of the shiny ‘must-haves’ before we might come back: KubeCon EU 2025 will be held in London from April 1-4, 2025.

The next KubeCons will be in London/Atlanta (2025) and Amsterdam/Los Angeles (2026)

If you are working with cloud-native technologies, especially Kubernetes, yourself, and would love to attend KubeCon one day, have a look at our open positions – maybe you’d like to be part of our KubeCon 2025 Review?

If you need a primer on Kubernetes and what it can do for you, head over to our Kubernetes tutorials – we promise they’re great!
